Akka Tutorials

In this section, we will show how to use Akka to help you build scalable and reactive applications.

If you are not familiar with IntelliJ and Scala, feel free to review our previous tutorials on IntelliJ and Scala. So let's get started!

Source Code:

- The source code is available on the allaboutscala GitHub repository.

Introduction:

- What is Akka?

- Project setup build.sbt

Akka Actors:

- Actor System Introduction

- Tell Pattern

- Ask Pattern

- Ask Pattern mapTo

- Ask Pattern pipeTo

- Actor Hierarchy

- Actor Lookup

- Child actors

- Actor Lifecycle

- Actor PoisonPill

- Error Kernel Supervision

Akka Routers:

Akka Dispatchers:

- Akka Default Dispatcher

- Akka Lookup Dispatcher

- Fixed Thread Pool Dispatcher

- Resizable Thread Pool Dispatcher

- Pinned Thread Pool Dispatcher

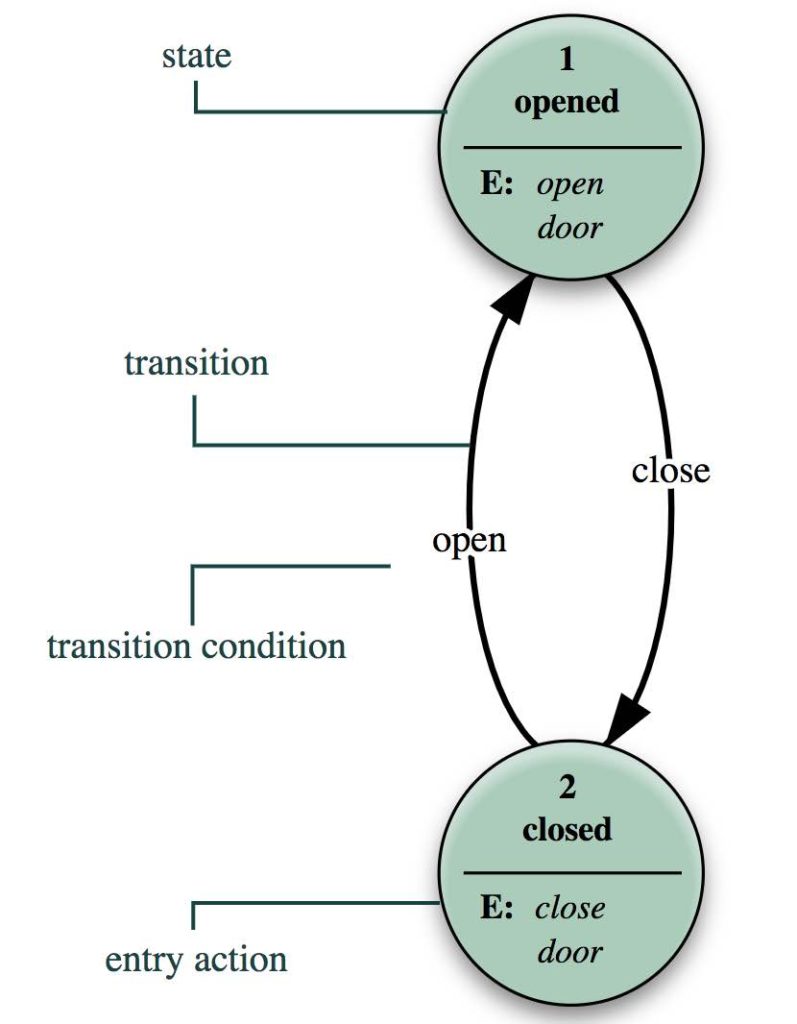

Akka FSM:

- Actor FSM become()

- Actor FSM unbecome()

- Actor FSM protocol

- Actor LoggingFSM

- Actor LoggingFSM Part Two

- Actor LoggingFSM Part Three

- Actor LoggingFSM Part Four

- Actor LoggingFSM Part Five

- Actor LoggingFSM Part Six

- Actor FSM Scheduler

Akka TestKit:

- Testing Actor FSM

- Testing Actor

- Testing Akka HTTP POST

- Testing Query Parameter

- Testing Required Query Parameter

- Testing Optional Query Parameter

- Testing Typed Query Parameter

- Testing CSV Query Parameter

Akka HTTP:

- Akka HTTP project setup build.sbt

- Start Akka HTTP server

- HTTP GET plain text

- HTTP GET JSON response

- JSON encoding

- JSON pretty print

- HTTP POST JSON payload

- Could not find implicit value

- HTTP DELETE restriction

- Future onSuccess

- Future onComplete

- Complete with an HttpResponse

- Try failure using an HttpResponse

- Global rejection handler

- Global exception handler

- Load HTML from resources

- RESTful URLs with segment

- RESTful URLs with regex

- RESTful URLs multiple segments

- Query parameter

- Optional query parameter

- Typed query parameters

- CSV query parameter

- Query parameter to case class

- HTTP request headers

- HTTP client GET

- Unmarshal HttpResponse to case class

- HTTP client POST JSON

- Akka HTTP CRUD project

- Akka HTTP CRUD project - part 2

- Akka HTTP CRUD project - part 3

What is Akka?

Akka is a suite of modules which allow you to build distributed and reliable systems by leaning on the actor model.

The actor model emphasises avoiding locks in your system: instead of sharing state between threads, actors achieve concurrency and parallelism through message passing. Actors are the 'things' that react to messages; an actor may run some computation and/or respond to another actor by sending it a message. The actor model has been around for a while, and was certainly made popular by languages such as Erlang.

Akka brings similar concurrency and parallelism features to the JVM, and you can use either Java or Scala with the Akka libraries. Unsurprisingly, our Akka code snippets below will use Scala :)
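To make the "no locks" point a little more concrete, here is a minimal sketch, assuming the Akka 2.5 classic actor API and the build.sbt dependencies shown later in this tutorial. The CounterActor, Increment and GetCount names are hypothetical and are not part of the tutorial's source code. The actor keeps its count in a plain var, yet ten concurrent tasks can send it messages without any locking, because an actor processes the messages in its mailbox one at a time. The sketch also peeks ahead at the ? (ask) operator, which is covered in the Ask Pattern tutorial.

```scala
import akka.actor.{Actor, ActorSystem, Props}
import akka.pattern.ask
import akka.util.Timeout
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object NoLocksSketch {
  // Hypothetical protocol for this sketch
  case object Increment
  case object GetCount

  // Plain mutable state: safe because the actor handles one message at a time
  class CounterActor extends Actor {
    private var count = 0

    def receive = {
      case Increment => count += 1
      case GetCount  => sender() ! count
    }
  }

  def run(): Int = {
    val system = ActorSystem("CounterSystem")
    import system.dispatcher // ExecutionContext for the sending futures
    implicit val timeout: Timeout = Timeout(5.seconds)

    val counter = system.actorOf(Props[CounterActor], "CounterActor")

    // 10 concurrent tasks each send 100 Increment messages, with no locks anywhere
    val senders = Future.sequence((1 to 10).map { _ =>
      Future((1 to 100).foreach(_ => counter ! Increment))
    })
    Await.ready(senders, 5.seconds)

    // GetCount is enqueued after every Increment, so the reply includes all of them
    val total = Await.result((counter ? GetCount).mapTo[Int], 5.seconds)
    system.terminate()
    total
  }
}
```

Calling NoLocksSketch.run() should return 1000, since every Increment has been enqueued before GetCount is sent.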

For the purpose of this Akka tutorial, we will be using the latest version, which at the time of writing is 2.5.12. All links to the official Akka documentation also refer to version 2.5.12.

The Akka ecosystem has evolved fairly rapidly over the past few years. Below is a quick overview of the Akka modules for which we will provide code snippets:

Akka Actor:

This module introduces the Actor System, functions and utilities that Akka provides to support the actor model and message passing. For additional information, you can refer to the official Akka documentation on Actors.

Akka HTTP:

As the name implies, this module is typically best suited for middle-tier applications which need to expose an HTTP endpoint. For example, you could use Akka HTTP to expose a REST endpoint that interfaces with a storage layer such as a database. For additional information, you can refer to the official Akka documentation on Akka HTTP.

Akka Streams:

This module is useful when you are working on data pipelines or even stream processing. For additional information, you can refer to the official Akka documentation on Akka Streams.

Akka Networking:

This module provides the foundation that allows actor systems to connect to each other remotely over a predefined network transport such as TCP. For additional information, you can refer to the official Akka documentation on Akka Networking.

Akka Clustering:

This module is an extension of the Akka Networking module. It is useful for scaling distributed applications by having actors on multiple nodes form a cluster and work together through a predefined membership protocol. For additional information, you can refer to the official Akka documentation on Akka Clustering.

Project setup build.sbt

As a reminder, we will be using the latest version of Akka, which is 2.5.12 at the time of writing. Below are the library dependencies to add to your build.sbt in order to import the Akka modules. Note that we're also using Scala 2.12.

```scala
scalaVersion := "2.12.4"

libraryDependencies ++= Seq(
  "com.typesafe.akka" %% "akka-actor" % "2.5.12",
  "com.typesafe.akka" %% "akka-testkit" % "2.5.12" % Test
)
```

Actor System Introduction

In this tutorial, you will create your first Akka ActorSystem. For the purpose of this tutorial, you can think of the ActorSystem as a 'black' box that will eventually hold your actors, and allow you to interact with them.

As a reminder, the actor model puts message passing as a first class citizen. If the actor model is still obscure at this time, that's OKAY. In the upcoming tutorials, we will demonstrate how to design protocols that our actors will understand and react to.

Defining our actor system using Akka is super easy. You simply need to create an instance of akka.actor.ActorSystem. In the code snippet below, we will give our actor system a name: DonutStoreActorSystem.

```scala
import akka.actor.ActorSystem

println("Step 1: create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

For the purpose of this tutorial, we are not doing much with our actor system :( But it is important to show that you can close it by calling the terminate() method. You would typically close your actor system when your application is shutting down, much as you would cleanly close any other open resources such as database connections.

```scala
println("\nStep 2: close the actor system")
val isTerminated = system.terminate()
```

For completeness, we'll also register a Future onComplete() callback to know whether our actor system was successfully shut down. If you are not familiar with registering future callbacks, feel free to review our tutorial on Future onComplete().

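```scala
import scala.concurrent.ExecutionContext.Implicits.global // onComplete() needs an ExecutionContext
import scala.util.{Failure, Success}

println("\nStep 3: Check the status of the actor system")
isTerminated.onComplete {
  case Success(_) => println("Successfully terminated actor system")
  case Failure(e) => println(s"Failed to terminate actor system: ${e.getMessage}")
}

Thread.sleep(5000)
```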
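Thread.sleep(5000) simply keeps the program alive long enough for the callback to print. As a minimal alternative sketch, assuming it is acceptable to block the calling thread during shutdown, you can wait on the system's whenTerminated future (available on ActorSystem in Akka 2.5), which completes once termination has finished. TerminateAndWait is a hypothetical name used only for this example.

```scala
import akka.actor.ActorSystem
import scala.concurrent.Await
import scala.concurrent.duration._

object TerminateAndWait {
  // Creates an actor system, asks it to terminate, and blocks (for at most
  // 10 seconds) until termination has completed
  def run(): Boolean = {
    val system = ActorSystem("DonutStoreActorSystem")
    system.terminate()
    Await.ready(system.whenTerminated, 10.seconds)
    system.whenTerminated.isCompleted
  }
}
```

Blocking with Await is generally discouraged inside actors, but at the outer edge of a small application like this one it is a simple way to avoid guessing a sleep duration.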

Tell Pattern

In this tutorial, we will create our first Akka Actor and show how to design a simple message passing protocol to interact with it. Akka provides various interaction patterns, and the one we will use in this tutorial is called the Tell Pattern. This pattern is useful when you need to send a message to an actor but do not expect a response, which is why it is also commonly referred to as "fire and forget".

As per the previous Actor System Introduction tutorial, we instantiate an ActorSystem, and give it the name DonutStoreActorSystem.

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, Props}

println("Step 1: Create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

Next, we design a simple message passing protocol and use a case class to encapsulate the message. We create a case class named Info, which for now holds a single property: name of type String.

```scala
println("\nStep 2: Define the message passing protocol for our DonutStoreActor")
object DonutStoreProtocol {
  case class Info(name: String)
}
```

Creating an Akka Actor is really easy: all you have to do is have a class extend the Actor trait. Akka also comes with a built-in logging utility for actors, which you can access by mixing in the ActorLogging trait.

Inside our actor, the primary method we are interested in at the moment is receive. The receive method is where you tell your actor which messages, or protocol, it reacts to. Our DonutInfoActor below reacts to Info messages by simply logging the name property.

```scala
println("\nStep 3: Define DonutInfoActor")
class DonutInfoActor extends Actor with ActorLogging {
  // Tutorial_02_Tell_Pattern is the enclosing object in the accompanying source code
  import Tutorial_02_Tell_Pattern.DonutStoreProtocol._

  def receive = {
    case Info(name) =>
      log.info(s"Found $name donut")
  }
}
```

At this point, the DonutStoreActorSystem is still empty. Let's use the actorOf() method to create our DonutInfoActor.

```scala
println("\nStep 4: Create DonutInfoActor")
val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
```

To send a message to our DonutInfoActor using the Akka Tell Pattern, you can use the bang operator ! as shown below.

```scala
println("\nStep 5: Akka Tell Pattern")
import DonutStoreProtocol._
donutInfoActor ! Info("vanilla")
```

Finally, we close our actor system by calling the terminate() method.

```scala
println("\nStep 6: close the actor system")
val isTerminated = system.terminate()
```

You should see the following output when you run your Scala application in IntelliJ:

```
[INFO] [06/22/2018 15:40:33.443] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found vanilla donut
```
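The bang operator ! is shorthand for calling tell() on an ActorRef; when it is used outside an actor, no sender is attached. The sketch below, assuming the same Info message, sends one message each way. Because the Tell Pattern never replies, the sketch uses a java.util.concurrent.CountDownLatch, an addition for this example only, to observe that both messages arrived; TellSketch is a hypothetical name.

```scala
import java.util.concurrent.{CountDownLatch, TimeUnit}
import akka.actor.{Actor, ActorLogging, ActorRef, ActorSystem, Props}

object TellSketch {
  case class Info(name: String)

  // Counts down the latch for every Info message it receives
  class DonutInfoActor(latch: CountDownLatch) extends Actor with ActorLogging {
    def receive = {
      case Info(name) =>
        log.info(s"Found $name donut")
        latch.countDown()
    }
  }

  def run(): Boolean = {
    val system = ActorSystem("DonutStoreActorSystem")
    val latch = new CountDownLatch(2)
    val donutInfoActor = system.actorOf(Props(new DonutInfoActor(latch)), name = "DonutInfoActor")

    donutInfoActor ! Info("vanilla")                       // bang operator
    donutInfoActor.tell(Info("glazed"), ActorRef.noSender) // explicit tell() with no sender

    // true if both messages were received within 5 seconds
    val delivered = latch.await(5, TimeUnit.SECONDS)
    system.terminate()
    delivered
  }
}
```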

Ask Pattern

In this tutorial, we will show another interaction pattern for Akka actors, namely the Ask Pattern. This pattern allows you to send a message to an actor and get a response back. Recall from the previous tutorial that with the Tell Pattern, you do not get a reply from the actor.

We start by creating an ActorSystem and, similar to the previous examples, we'll name it DonutStoreActorSystem.

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, Props}

println("Step 1: Create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

For now, we'll keep the message passing protocol unchanged from our previous examples. Our message is represented by the case class named Info, and it has a single property: name of type String.

```scala
println("\nStep 2: Define the message passing protocol for our DonutStoreActor")
object DonutStoreProtocol {
  case class Info(name: String)
}
```

As in the Tell Pattern example, creating an Akka actor is as easy as extending the Actor trait and implementing the receive method. Within the receive body, we use the sender() method to reply to the actor that sent the message. In our simple example below, we reply with true when the name property of the Info message is vanilla, and with false for all other values of the name property.

```scala
println("\nStep 3: Create DonutInfoActor")
class DonutInfoActor extends Actor with ActorLogging {
  import Tutorial_03_Ask_Pattern.DonutStoreProtocol._

  def receive = {
    case Info(name) if name == "vanilla" =>
      log.info(s"Found valid $name donut")
      sender() ! true // reply to whoever sent the Info message

    case Info(name) =>
      log.info(s"$name donut is not supported")
      sender() ! false
  }
}
```

To create the DonutInfoActor within our DonutStoreActorSystem, we use the actorOf() method as shown below.

```scala
println("\nStep 4: Create DonutInfoActor")
val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
```

To use the Akka Ask Pattern, you use the ? operator (recall that the Tell Pattern used the ! operator). The Ask Pattern returns a Future, and since the actor may reply with any type, it is a Future[Any]. For the purpose of this example, we will use a for comprehension to print the response from the DonutInfoActor. If you are new to Futures in Scala, feel free to review our Futures tutorial to learn about creating asynchronous non-blocking operations using futures.

```scala
println("\nStep 5: Akka Ask Pattern")
import DonutStoreProtocol._
import akka.pattern._
import akka.util.Timeout
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

// the ? operator needs an implicit timeout for the reply
implicit val timeout: Timeout = Timeout(5.seconds)

val vanillaDonutFound = donutInfoActor ? Info("vanilla")
for {
  found <- vanillaDonutFound
} yield println(s"Vanilla donut found = $found")

val glazedDonutFound = donutInfoActor ? Info("glazed")
for {
  found <- glazedDonutFound
} yield println(s"Glazed donut found = $found")

Thread.sleep(5000)
```

Finally, we call the terminate() method to shut down our actor system.

```scala
println("\nStep 6: Close the actor system")
val isTerminated = system.terminate()
```

You should see the following output when you run your Scala application in IntelliJ:

```
[INFO] [06/22/2018 21:37:20.381] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found valid vanilla donut
Vanilla donut found = true
[INFO] [06/22/2018 21:37:20.391] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutInfoActor] glazed donut is not supported
Glazed donut found = false
```
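One thing the example above does not show is what happens when the actor never replies. The future returned by ? then fails with an akka.pattern.AskTimeoutException once the implicit timeout elapses. The minimal sketch below assumes a hypothetical variant of DonutInfoActor that replies only to vanilla, and uses a short 500 ms timeout so the failure arrives quickly; recover() turns the failure into a readable message.

```scala
import akka.actor.{Actor, ActorSystem, Props}
import akka.pattern.{ask, AskTimeoutException}
import akka.util.Timeout
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object AskTimeoutSketch {
  case class Info(name: String)

  // Hypothetical variant: replies only for vanilla and stays silent otherwise
  class DonutInfoActor extends Actor {
    def receive = {
      case Info(name) if name == "vanilla" => sender() ! true
      case Info(_)                         => // no reply
    }
  }

  def run(): String = {
    val system = ActorSystem("DonutStoreActorSystem")
    import system.dispatcher // ExecutionContext for map() and recover()
    implicit val timeout: Timeout = Timeout(500.millis)

    val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")

    val glazed: Future[String] = (donutInfoActor ? Info("glazed"))
      .mapTo[Boolean]
      .map(found => s"Glazed donut found = $found")
      .recover { case _: AskTimeoutException => "No reply within 500 ms" }

    val result = Await.result(glazed, 5.seconds)
    system.terminate()
    result
  }
}
```

In a real application you would choose a timeout that reflects how long the actor is actually expected to take to reply.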

Ask Pattern mapTo

This tutorial extends our previous example on Akka's Ask Pattern. The actor system, protocol and actor remain identical to the code snippets we used in the Ask Pattern tutorial. Instead, we will focus on the Future.mapTo() method, which converts the Future[Any] returned by the Ask Pattern into a future of a specific type.

Similar to our previous examples, we create an ActorSystem named DonutStoreActorSystem which will hold our actors.

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, Props}

println("Step 1: Create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

We keep our simple Info case class which represents the message that our actor will react to.

```scala
println("\nStep 2: Define the message passing protocol for our DonutStoreActor")
object DonutStoreProtocol {
  case class Info(name: String)
}
```

The DonutInfoActor is unchanged from our previous Ask Pattern example. The actor reacts to messages of type Info: it replies with a Boolean value of true when the name property is vanilla, and false for all other values of the name property.

```scala
println("\nStep 3: Create DonutInfoActor")
class DonutInfoActor extends Actor with ActorLogging {
  import Tutorial_04_Ask_Pattern_MapTo.DonutStoreProtocol._

  def receive = {
    case Info(name) if name == "vanilla" =>
      log.info(s"Found valid $name donut")
      sender() ! true

    case Info(name) =>
      log.info(s"$name donut is not supported")
      sender() ! false
  }
}
```

Using the actorOf() method, we create a DonutInfoActor in our actor system.

```scala
println("\nStep 4: Create DonutInfoActor")
val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
```

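Using the Future.mapTo() method, we can map the reply from the actor to a specific type. In our example, the reply is mapped to the Boolean type. Note that mapTo() performs a cast: if the reply is not of the requested type, the resulting future fails with a ClassCastException.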

```scala
println("\nStep 5: Akka Ask Pattern and future mapTo()")
import DonutStoreProtocol._
import akka.pattern._
import akka.util.Timeout
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.Future
import scala.concurrent.duration._

implicit val timeout: Timeout = Timeout(5.seconds)

// mapTo[Boolean] turns the Future[Any] returned by ? into a Future[Boolean]
val vanillaDonutFound: Future[Boolean] = (donutInfoActor ? Info("vanilla")).mapTo[Boolean]
for {
  found <- vanillaDonutFound
} yield println(s"Vanilla donut found = $found")

Thread.sleep(5000)
```

Finally, we call the terminate() method to shut down our actor system.

```scala
println("\nStep 6: close the actor system")
val isTerminated = system.terminate()
```

You should see the following output when you run your Scala application in IntelliJ:

```
[INFO] [06/22/2018 20:34:36.144] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found valid vanilla donut
Vanilla donut found = true
```
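Since mapTo() only casts the reply and does not convert it, asking for the wrong type is a runtime failure rather than a compile error. As a minimal sketch, assuming a DonutInfoActor that replies with a Boolean as above, mapTo[Boolean] succeeds while mapTo[Int] yields a future that fails with a ClassCastException. MapToSketch is a hypothetical name.

```scala
import akka.actor.{Actor, ActorSystem, Props}
import akka.pattern.ask
import akka.util.Timeout
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object MapToSketch {
  case class Info(name: String)

  // Replies with a Boolean, like the tutorial's DonutInfoActor
  class DonutInfoActor extends Actor {
    def receive = {
      case Info(name) => sender() ! (name == "vanilla")
    }
  }

  def run(): (Boolean, String) = {
    val system = ActorSystem("DonutStoreActorSystem")
    import system.dispatcher // ExecutionContext for map() and recover()
    implicit val timeout: Timeout = Timeout(5.seconds)

    val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")

    // Matching type: the Boolean reply is cast successfully
    val found: Future[Boolean] = (donutInfoActor ? Info("vanilla")).mapTo[Boolean]

    // Mismatched type: the cast fails, and so does the future
    val wrongType: Future[String] = (donutInfoActor ? Info("vanilla"))
      .mapTo[Int]
      .map(_.toString)
      .recover { case _: ClassCastException => "ClassCastException" }

    val result = (Await.result(found, 5.seconds), Await.result(wrongType, 5.seconds))
    system.terminate()
    result
  }
}
```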

Ask Pattern pipeTo

We continue our discussion on the Akka Ask Pattern and show another handy utility named pipeTo(). It attaches to a Future by registering an andThen callback, so that once the Future completes, its result is sent to a given actor, typically the sender. If the Future fails, the recipient receives an akka.actor.Status.Failure message instead. Additional details on using pipeTo() can be found in the official Akka documentation.

As per our previous example, we create an actor system named DonutStoreActorSystem which will hold our Akka actors.

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, Props}

println("Step 1: Create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

In this step, we augment our message passing protocol by defining another message named CheckStock. In a real-life application, you could imagine the CheckStock message checking the stock quantity for a given donut.

```scala
println("\nStep 2: Define the message passing protocol for our DonutStoreActor")
object DonutStoreProtocol {
  case class Info(name: String)
  case class CheckStock(name: String)
}
```

We create a DonutStockActor which will react to a CheckStock message. It will then delegate the call to a future operation named findStock(), which does the actual stock lookup. In order to send the result back to the sender, we use the handy pipeTo() method as shown below.

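```scala
import scala.concurrent.Future

println("\nStep 3: Create DonutStockActor")
class DonutStockActor extends Actor with ActorLogging {
  import Tutorial_05_Ask_Pattern_pipeTo.DonutStoreProtocol._
  import akka.pattern.pipe
  // the actor's dispatcher is the ExecutionContext for findStock() and pipeTo()
  import context.dispatcher

  def receive = {
    case CheckStock(name) =>
      log.info(s"Checking stock for $name donut")
      // sender() is read now, before the Future completes
      findStock(name).pipeTo(sender())
  }

  def findStock(name: String): Future[Int] = Future {
    // assume a long running database operation to find stock for the given donut
    100
  }
}
```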

We create the DonutStockActor in our DonutStoreActorSystem by calling the actorOf() method.

```scala
println("\nStep 4: Create DonutStockActor")
val donutStockActor = system.actorOf(Props[DonutStockActor], name = "DonutStockActor")
```

Similar to the previous mapTo() example, we use the Akka Ask Pattern to check the donut stock. Note that with the mapTo() method, we are mapping the return type from the actor to a specific type (in this case, an Int type).

```scala
println("\nStep 5: Akka Ask Pattern using mapTo() method")
import DonutStoreProtocol._
import akka.pattern._
import akka.util.Timeout
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

implicit val timeout: Timeout = Timeout(5.seconds)

val vanillaDonutStock: Future[Int] = (donutStockActor ? CheckStock("vanilla")).mapTo[Int]
for {
  found <- vanillaDonutStock
} yield println(s"Vanilla donut stock = $found")

Thread.sleep(5000)
```

Finally, we close our actor system by calling the terminate() method.

```scala
println("\nStep 6: Close the actor system")
val isTerminated = system.terminate()
```

You should see the following output when you run your Scala application in IntelliJ:

```
[INFO] [06/26/2018 20:47:55.499] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut
Vanilla donut stock = 100
```
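The example above only covers the happy path. When the piped Future fails, pipeTo() sends the recipient an akka.actor.Status.Failure, and the ask future on the caller's side then fails with the original exception. The sketch below assumes a hypothetical findStock() that only knows about vanilla donuts and throws a NoSuchElementException for anything else.

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, Props}
import akka.pattern.{ask, pipe}
import akka.util.Timeout
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object PipeToSketch {
  case class CheckStock(name: String)

  class DonutStockActor extends Actor with ActorLogging {
    import context.dispatcher // ExecutionContext for findStock() and pipeTo()

    def receive = {
      case CheckStock(name) =>
        log.info(s"Checking stock for $name donut")
        findStock(name).pipeTo(sender())
    }

    // Hypothetical lookup: only vanilla is "in the database"
    def findStock(name: String): Future[Int] = Future {
      if (name == "vanilla") 100
      else throw new NoSuchElementException(s"No stock for $name donut")
    }
  }

  def run(): (Int, String) = {
    val system = ActorSystem("DonutStoreActorSystem")
    import system.dispatcher // ExecutionContext for map() and recover()
    implicit val timeout: Timeout = Timeout(5.seconds)

    val donutStockActor = system.actorOf(Props[DonutStockActor], name = "DonutStockActor")

    val vanilla: Future[Int] = (donutStockActor ? CheckStock("vanilla")).mapTo[Int]

    // The failed Future arrives as Status.Failure, which fails the ask future
    val glazed: Future[String] = (donutStockActor ? CheckStock("glazed"))
      .mapTo[Int]
      .map(_.toString)
      .recover { case e: NoSuchElementException => e.getMessage }

    val result = (Await.result(vanilla, 5.seconds), Await.result(glazed, 5.seconds))
    system.terminate()
    result
  }
}
```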

Actor Hierarchy

In this tutorial, we will reuse the Tell Pattern example and discuss Akka's actor hierarchy in a bit more detail. As a reminder, the actor model favours actor-to-actor message passing, as we've seen in the previous code snippets. It is also important to note that the actors within an actor system exist in a hierarchy.

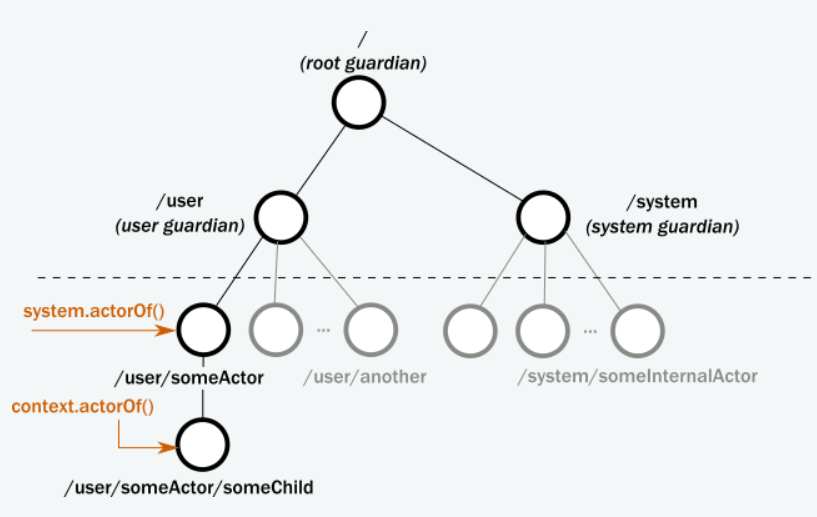

The diagram below from the official Akka documentation provides a good visual overview of the actor hierarchy as supported by Akka.

At the top of the hierarchy is the root guardian. You can think of it as the top-most actor, which monitors the entire actor system. Below it is the system guardian, the top-level actor in charge of any system-level actors. On the left-hand side, you will notice the user guardian, which is the top-level actor for the actors that we create in our actor system. Note also that hierarchies can be nested, as shown by the someChild actor.

For now, let's focus on the user guardian. If you recall from the Tell Pattern example, the DonutInfoActor was logging the statement below when it received an Info message:

```
[INFO] [05/09/2018 11:36:47.095] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found vanilla donut
```

From the above log statement, you can see the path of DonutInfoActor: akka://DonutStoreActorSystem/user/DonutInfoActor. Think of an actor's path as similar to the location of a file in a file system. DonutInfoActor sits under the user guardian (/user), and the path also includes the name of our actor system, DonutStoreActorSystem, which is the name we used when creating it.
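Inside an actor, the same path information is available programmatically through self.path, and the actor one level above it through context.parent. As a minimal sketch, assuming a hypothetical WhereAreYou message, a top-level DonutInfoActor can report its own path and its parent's path; for a top-level actor, the parent is the user guardian.

```scala
import akka.actor.{Actor, ActorSystem, Props}
import akka.pattern.ask
import akka.util.Timeout
import scala.concurrent.Await
import scala.concurrent.duration._

object HierarchySketch {
  // Hypothetical message: asks an actor to describe where it lives
  case object WhereAreYou

  class DonutInfoActor extends Actor {
    def receive = {
      case WhereAreYou =>
        // self.path is this actor's path; context.parent is the actor above it
        sender() ! s"${self.path} (parent: ${context.parent.path})"
    }
  }

  def run(): String = {
    val system = ActorSystem("DonutStoreActorSystem")
    implicit val timeout: Timeout = Timeout(5.seconds)
    val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
    val answer = Await.result((donutInfoActor ? WhereAreYou).mapTo[String], 5.seconds)
    system.terminate()
    answer
  }
}
```

Running HierarchySketch.run() should return akka://DonutStoreActorSystem/user/DonutInfoActor (parent: akka://DonutStoreActorSystem/user).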

Understanding actor hierarchies is important to help us design systems which follow the Fail-Fast principle. In upcoming tutorials, we will show how to design actor systems to isolate failures, and plan ahead with recovery options. What we've learned on actor hierarchies will be the foundation to design resilient actor systems that can deal with failures.

Actor Lookup

In this tutorial, we will continue our discussion on Actor Hierarchy, and show how we can use an actor's path to find it within an actor system. For the purpose of this example, we will reuse the code snippets from our Tell Pattern tutorial. You can find additional information on Actor paths from the official Akka documentation on Actor Paths and Addressing.

By now, you should be familiar with creating an Actor System as shown below:

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, Props}

println("Step 1: Create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

Next, we define a simple protocol for message passing with our actor.

```scala
println("\nStep 2: Define the message passing protocol for our DonutStoreActor")
object DonutStoreProtocol {
  case class Info(name: String)
}
```

In this step, we create a DonutInfoActor by extending the akka.actor.Actor trait.

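```scala
println("\nStep 3: Define DonutInfoActor")
class DonutInfoActor extends Actor with ActorLogging {
  // brings the Info message into scope for the pattern match below
  import DonutStoreProtocol._

  def receive = {
    case Info(name) =>
      log.info(s"Found $name donut")
  }
}
```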

With our DonutInfoActor defined in Step 3, we create it in our actor system using the actorOf() method.

```scala
println("\nStep 4: Create DonutInfoActor")
val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
```

In the Tell Pattern example, we used the reference to the DonutInfoActor to send an Info message to it using the bang operator !.

```scala
println("\nStep 5: Akka Tell Pattern")
import DonutStoreProtocol._
donutInfoActor ! Info("vanilla")
```

In the previous tutorial on Actor Hierarchy, we explained that the actors we create in our actor system reside under the /user hierarchy. We should therefore be able to find the DonutInfoActor by its path: /user/DonutInfoActor. The actorSelection() method returns an ActorSelection, and, just as with an ActorRef, we can use the bang operator ! to send it a message using the Tell Pattern.

```scala
println("\nStep 6: Find Actor using actorSelection() method")
system.actorSelection("/user/DonutInfoActor") ! Info("chocolate")
```

You should see the following output when you run your Scala application in IntelliJ:

```
[INFO] [06/26/2018 20:09:54.763] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found chocolate donut
```

As another example, you can also use the actorSelection() method with the wildcard /user/* to send a message to all the top-level actors that we created under the user guardian (children of those actors are not matched by this pattern):

```scala
system.actorSelection("/user/*") ! Info("vanilla and chocolate")
```

You should see the following output when you run your Scala application in IntelliJ:

```
[INFO] [06/26/2018 20:09:54.763] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found vanilla and chocolate donut
```

You may ask why we would not simply use the ActorRef returned by system.actorOf(), as we've done so far. One reason is that actor systems can run locally within a JVM or be hosted remotely on another JVM, and an actor path can also address an actor in a remote actor system. Message passing between actors is transparent to the end user, as Akka handles the underlying transport. In upcoming tutorials, we will see examples of remote actors.
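An ActorSelection can also be turned back into an ActorRef. As a minimal sketch, ActorSelection.resolveOne(), which takes an implicit Timeout, returns a Future[ActorRef]; if no actor lives at the selected path, the future fails with akka.actor.ActorNotFound. The LookupSketch object and the /user/NoSuchActor path are hypothetical.

```scala
import akka.actor.{Actor, ActorLogging, ActorNotFound, ActorRef, ActorSystem, Props}
import akka.util.Timeout
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object LookupSketch {
  case class Info(name: String)

  class DonutInfoActor extends Actor with ActorLogging {
    def receive = {
      case Info(name) => log.info(s"Found $name donut")
    }
  }

  def run(): (Boolean, Boolean) = {
    val system = ActorSystem("DonutStoreActorSystem")
    import system.dispatcher // ExecutionContext for map() and recover()
    implicit val timeout: Timeout = Timeout(5.seconds) // used by resolveOne()

    val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")

    // Resolves to the same ActorRef that actorOf() returned
    val found: Future[ActorRef] = system.actorSelection("/user/DonutInfoActor").resolveOne()

    // Nothing lives at this (hypothetical) path, so the lookup fails with ActorNotFound
    val missing: Future[Boolean] = system.actorSelection("/user/NoSuchActor").resolveOne()
      .map(_ => false)
      .recover { case _: ActorNotFound => true }

    val foundRef = Await.result(found, 5.seconds)
    foundRef ! Info("chocolate") // an ActorRef again, usable with the Tell Pattern
    val result = (foundRef == donutInfoActor, Await.result(missing, 5.seconds))
    system.terminate()
    result
  }
}
```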

Finally, we use the terminate() method to close our actor system.

```scala
println("\nStep 7: close the actor system")
val isTerminated = system.terminate()
```

Child Actors

In this tutorial, we will continue our journey into understanding actor hierarchies and paths. More precisely, we will introduce child actors, i.e. actors that are spawned (and potentially managed and supervised) from within another actor. For now, let's not focus on supervision, as we will discuss Akka actor supervision strategies in upcoming tutorials.

As the usual first step, we create an actor system named DonutStoreActorSystem.

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, Props}

println("Step 1: Create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

We then define the protocol for message passing with our actors.

```scala
println("\nStep 2: Define the message passing protocol for our DonutStoreActor")
object DonutStoreProtocol {
  case class Info(name: String)
}
```

In this step, we will create a new actor named BakingActor by extending the usual akka.actor.Actor trait. This actor will be a child actor of the DonutInfoActor below.

```scala
println("\nStep 3: Define a BakingActor and a DonutInfoActor")
class BakingActor extends Actor with ActorLogging {
  import DonutStoreProtocol._

  def receive = {
    case Info(name) =>
      log.info(s"BakingActor baking $name donut")
  }
}
```

Creating DonutInfoActor is similar to the previous examples, i.e. we extend the akka.actor.Actor trait. Note however that we use the actor's context value and its actorOf() method to create a BakingActor. Because it is created through the actor's context, the BakingActor will be a child actor of DonutInfoActor.

From a hierarchy point of view, DonutInfoActor will reside under the /user hierarchy: /user/DonutInfoActor. This means that the child BakingActor's path will be /user/DonutInfoActor/BakingActor. To keep this example simple, inside the receive method of the DonutInfoActor, we simply forward the same Info message to the BakingActor. Unlike !, forward keeps the original sender of the message.

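```scala
class DonutInfoActor extends Actor with ActorLogging {
  import DonutStoreProtocol._

  // created through the actor's context, so BakingActor is a child of DonutInfoActor
  val bakingActor = context.actorOf(Props[BakingActor], name = "BakingActor")

  def receive = {
    case msg @ Info(name) =>
      log.info(s"Found $name donut")
      // forward keeps the original sender of the message
      bakingActor forward msg
  }
}
```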

We only need to create the DonutInfoActor as it will internally be responsible for the creation of the child actor BakingActor.

```scala
println("\nStep 4: Create DonutInfoActor")
val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
```

Sending an Info message to the DonutInfoActor using the Tell Pattern (fire-and-forget message passing style) will internally forward the message to the child BakingActor.

```scala
println("\nStep 5: Akka Tell Pattern")
import DonutStoreProtocol._
donutInfoActor ! Info("vanilla")

Thread.sleep(3000)
```

Finally, we close the actor system by using the terminate() method.

```scala
println("\nStep 6: close the actor system")
val isTerminated = system.terminate()
```

You should see the following output when you run your Scala application in IntelliJ:

```
[INFO] [06/29/2018 20:46:10.626] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found vanilla donut
[INFO] [06/29/2018 20:46:10.627] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor/BakingActor] BakingActor baking vanilla donut
```
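Because DonutInfoActor uses forward rather than !, the BakingActor sees the original sender. The minimal sketch below, which goes slightly beyond the tutorial code, asks the parent using the Ask Pattern and has the child reply directly with sender(); the reply reaches the caller without passing back through DonutInfoActor. ChildActorSketch is a hypothetical name.

```scala
import akka.actor.{Actor, ActorRef, ActorSystem, Props}
import akka.pattern.ask
import akka.util.Timeout
import scala.concurrent.Await
import scala.concurrent.duration._

object ChildActorSketch {
  case class Info(name: String)

  class BakingActor extends Actor {
    def receive = {
      // sender() is still the original asker because the parent used forward
      case Info(name) => sender() ! s"${self.path.name} baking $name donut"
    }
  }

  class DonutInfoActor extends Actor {
    // created through context, so BakingActor is a child of DonutInfoActor
    val bakingActor: ActorRef = context.actorOf(Props[BakingActor], name = "BakingActor")

    def receive = {
      case msg: Info => bakingActor forward msg
    }
  }

  def run(): String = {
    val system = ActorSystem("DonutStoreActorSystem")
    implicit val timeout: Timeout = Timeout(5.seconds)
    val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
    val reply = Await.result((donutInfoActor ? Info("vanilla")).mapTo[String], 5.seconds)
    system.terminate()
    reply
  }
}
```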

Actor Lifecycle

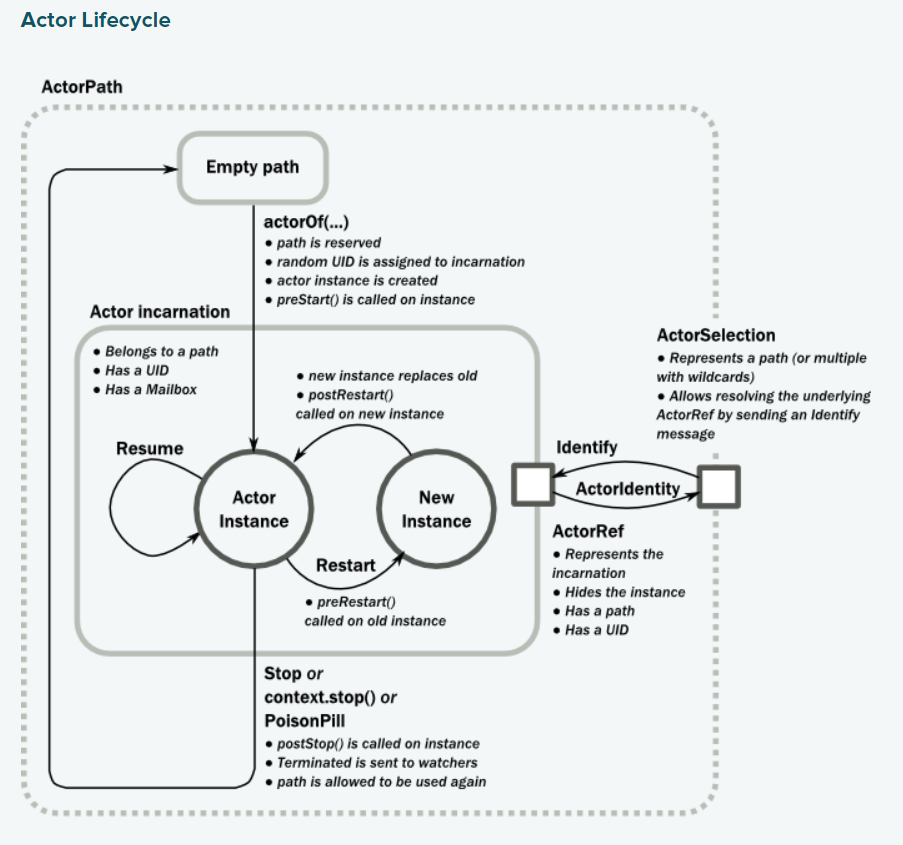

So far, we've gone through various examples on creating actors, understanding actor hierarchies and paths, and creating child actors. It is perhaps a good place to introduce Actor Lifecycles. Every Akka actor within our actor system follows a lifecycle, which represents the main events of an Actor from creation to deletion. For that matter, knowing when an Actor is about to start is perhaps important in a real-life application, where you may need to open a connection to a database session. Similarly, you should close the database session if the actor is stopped or crashed.

The diagram below from the official Akka documentation provides a good visual description of Actor lifecycle.

We create a DonutStoreActorSystem similar to the previous tutorials.

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, Props}

println("Step 1: Create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

We define the protocol for message passing with our Actors.

```scala
println("\nStep 2: Define the message passing protocol for our DonutStoreActor")
object DonutStoreProtocol {
  case class Info(name: String)
}
```

In the BakingActor, we override the actor lifecycle methods, namely preStart(), postStop(), preRestart() and postRestart().

```scala
println("\nStep 3: Define a BakingActor and a DonutInfoActor")
class BakingActor extends Actor with ActorLogging {
  import DonutStoreProtocol._

  override def preStart(): Unit = log.info("prestart")
  override def postStop(): Unit = log.info("postStop")

  override def preRestart(reason: Throwable, message: Option[Any]): Unit = {
    log.info("preRestart")
    super.preRestart(reason, message) // default: stops all children, then calls postStop()
  }

  override def postRestart(reason: Throwable): Unit = {
    log.info("postRestart")
    super.postRestart(reason) // default: calls preStart()
  }

  def receive = {
    case Info(name) =>
      log.info(s"BakingActor baking $name donut")
  }
}
```

Similar to the BakingActor, we override the actor lifecycle methods in DonutInfoActor and simply log a message so that we know which event is triggered during the lifetime of our actor.

```scala
class DonutInfoActor extends Actor with ActorLogging {
  import DonutStoreProtocol._

  override def preStart(): Unit = log.info("prestart")
  override def postStop(): Unit = log.info("postStop")

  override def preRestart(reason: Throwable, message: Option[Any]): Unit = {
    log.info("preRestart")
    // keep the default: stop the old BakingActor child so the fresh instance can recreate it
    super.preRestart(reason, message)
  }

  override def postRestart(reason: Throwable): Unit = {
    log.info("postRestart")
    super.postRestart(reason) // default: calls preStart()
  }

  val bakingActor = context.actorOf(Props[BakingActor], name = "BakingActor")

  def receive = {
    case msg @ Info(name) =>
      log.info(s"Found $name donut")
      bakingActor forward msg
  }
}
```

We create the DonutInfoActor by using the system.actorOf() method.

```scala
println("\nStep 4: Create DonutInfoActor")
val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
```

Using the Tell Pattern, we send an Info message to the DonutInfoActor.

```scala
println("\nStep 5: Akka Tell Pattern")
import DonutStoreProtocol._
donutInfoActor ! Info("vanilla")

Thread.sleep(5000)
```

Finally, we close the actor system by calling the terminate() method.

```scala
println("\nStep 6: close the actor system")
val isTerminated = system.terminate()
```

You should see the following output when you run your Scala application in IntelliJ:

```
[INFO] [06/29/2018 21:26:19.880] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor/BakingActor] prestart
[INFO] [06/29/2018 21:26:19.880] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutInfoActor] prestart
[INFO] [06/29/2018 21:26:19.882] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found vanilla donut
[INFO] [06/29/2018 21:26:19.883] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor/BakingActor] BakingActor baking vanilla donut
[INFO] [06/29/2018 21:26:24.885] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutInfoActor/BakingActor] postStop
[INFO] [06/29/2018 21:26:24.886] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutInfoActor] postStop
```
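The run above never triggers preRestart() or postRestart(), because nothing fails. The minimal sketch below assumes a hypothetical Boom message that makes the actor throw, and shows the order of lifecycle events during a restart under the default supervision strategy, which restarts an actor when it throws an ordinary Exception. Events are collected in a thread-safe queue instead of being logged so they can be inspected afterwards, and the overrides call super to keep the default behaviour (postStop() from preRestart(), preStart() from postRestart()).

```scala
import java.util.concurrent.{ConcurrentLinkedQueue, CountDownLatch, TimeUnit}
import akka.actor.{Actor, ActorSystem, Props}
import scala.collection.JavaConverters._
import scala.concurrent.Await
import scala.concurrent.duration._

object LifecycleSketch {
  // Hypothetical messages for this sketch
  case object Boom
  case object Ping

  // Thread-safe record of lifecycle events, shared by every BakingActor instance
  val events = new ConcurrentLinkedQueue[String]()

  class BakingActor(pinged: CountDownLatch) extends Actor {
    override def preStart(): Unit = events.add("preStart")
    override def postStop(): Unit = events.add("postStop")

    override def preRestart(reason: Throwable, message: Option[Any]): Unit = {
      events.add("preRestart")
      super.preRestart(reason, message) // default: stops children, then calls postStop()
    }

    override def postRestart(reason: Throwable): Unit = {
      events.add("postRestart")
      super.postRestart(reason) // default: calls preStart()
    }

    def receive = {
      case Boom => throw new IllegalStateException("oven on fire")
      case Ping => pinged.countDown()
    }
  }

  def run(): List[String] = {
    val system = ActorSystem("DonutStoreActorSystem")
    val pinged = new CountDownLatch(1)
    val bakingActor = system.actorOf(Props(new BakingActor(pinged)), name = "BakingActor")

    bakingActor ! Boom // the default supervisor strategy restarts the actor
    bakingActor ! Ping // handled by the fresh instance after the restart
    pinged.await(5, TimeUnit.SECONDS)

    system.terminate()
    Await.ready(system.whenTerminated, 10.seconds)
    events.asScala.toList
  }
}
```

LifecycleSketch.run() should return preStart, preRestart, postStop, postRestart, preStart, postStop: the failed instance is cleaned up, a fresh instance takes over, and that fresh instance is finally stopped when the actor system terminates.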

Actor PoisonPill

We continue our discussion from the previous Actor Lifecycle tutorial. In the last example, we showed how you can override actor lifecycle methods such as preStart() and postStop(). In this tutorial, we will show how to use akka.actor.PoisonPill, a special message that you send to an actor to stop it. Because PoisonPill is queued in the actor's mailbox like any other message, messages sent before it are processed first. Once the actor processes the PoisonPill message, you will see its postStop event being triggered in our log messages.
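To illustrate that ordering, here is a minimal sketch with hypothetical names: the message sent before the PoisonPill is processed, while the one sent after it is not and ends up as a dead letter. The sketch also uses context.watch() from a separate Watcher actor, which receives an akka.actor.Terminated message once the DonutInfoActor has stopped.

```scala
import java.util.concurrent.ConcurrentLinkedQueue
import akka.actor.{Actor, ActorRef, ActorSystem, PoisonPill, Props, Terminated}
import scala.collection.JavaConverters._
import scala.concurrent.{Await, Promise}
import scala.concurrent.duration._

object PoisonPillSketch {
  case class Info(name: String)

  // Names of the donuts that DonutInfoActor actually processed
  val processed = new ConcurrentLinkedQueue[String]()

  class DonutInfoActor extends Actor {
    def receive = {
      case Info(name) => processed.add(name)
    }
  }

  // Hypothetical watcher: completes the promise when the target actor stops
  class Watcher(target: ActorRef, stopped: Promise[Unit]) extends Actor {
    context.watch(target)

    def receive = {
      case Terminated(`target`) => stopped.trySuccess(())
    }
  }

  def run(): List[String] = {
    val system = ActorSystem("DonutStoreActorSystem")
    val stopped = Promise[Unit]()
    val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")
    system.actorOf(Props(new Watcher(donutInfoActor, stopped)), name = "Watcher")

    donutInfoActor ! Info("vanilla")   // queued before the PoisonPill: processed
    donutInfoActor ! PoisonPill        // stops the actor when it is processed
    donutInfoActor ! Info("chocolate") // queued after the PoisonPill: goes to dead letters

    Await.ready(stopped.future, 5.seconds)
    system.terminate()
    processed.asScala.toList
  }
}
```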

We create a DonutStoreActorSystem similar to previous tutorials.

```scala
import akka.actor.{Actor, ActorLogging, ActorSystem, PoisonPill, Props}

println("Step 1: Create an actor system")
val system = ActorSystem("DonutStoreActorSystem")
```

Next, we define the protocol for message passing with our Actors.

println("\nStep 2: Define the message passing protocol for our DonutStoreActor")

object DonutStoreProtocol {

case class Info(name: String)

}

In the BakingActor, we override the Actor lifecycle events, namely the preStart(), postStop(), preRestart() and postRestart() methods.

println("\nStep 3: Define a BakingActor and a DonutInfoActor")

class BakingActor extends Actor with ActorLogging {

override def preStart(): Unit = log.info("prestart")

override def postStop(): Unit = log.info("postStop")

override def preRestart(reason: Throwable, message: Option[Any]): Unit = log.info("preRestart")

override def postRestart(reason: Throwable): Unit = log.info("postRestart")

def receive = {

case Info(name) =>

log.info(s"BakingActor baking $name donut")

}

}

Similar to the BakingActor, we override the Actor lifecycle events in DonutInfoActor and simply log a message, so that we know which event is triggered during the lifetime of our actor.

class DonutInfoActor extends Actor with ActorLogging {

override def preStart(): Unit = log.info("prestart")

override def postStop(): Unit = log.info("postStop")

override def preRestart(reason: Throwable, message: Option[Any]): Unit = log.info("preRestart")

override def postRestart(reason: Throwable): Unit = log.info("postRestart")

val bakingActor = context.actorOf(Props[BakingActor], name = "BakingActor")

def receive = {

case msg @ Info(name) =>

log.info(s"Found $name donut")

bakingActor forward msg

}

}

We create the DonutInfoActor by using the system.actorOf() method.

println("\nStep 4: Create DonutInfoActor")

val donutInfoActor = system.actorOf(Props[DonutInfoActor], name = "DonutInfoActor")

Using the Tell Pattern, we send an Info message to the DonutInfoActor, followed by a PoisonPill message. After the PoisonPill message, we try to send another Info message to the actor.

println("\nStep 5: Akka Tell Pattern")

import DonutStoreProtocol._

donutInfoActor ! Info("vanilla")

Next, we send the PoisonPill message to terminate the DonutInfoActor.

donutInfoActor ! PoisonPill

donutInfoActor ! Info("plain")

Thread.sleep(5000)

Since DonutInfoActor will have stopped by the time the Info("plain") message would be processed, that message will not be consumed. Instead, it is delivered to the actor system's dead letters, which is the destination for any message that cannot be delivered within an actor system. You can find additional information on Akka dead letters in the official Akka documentation.
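To make the ordering concrete, here is a small plain-Scala simulation of how a mailbox treats a PoisonPill. It is not Akka code: the Msg, Info, PoisonPill and simulate names are hypothetical stand-ins. Messages are handled in FIFO order, the actor stops when it dequeues the PoisonPill, and anything queued after it ends up as a dead letter.

```scala
// A plain-Scala model of mailbox ordering around a PoisonPill; NOT Akka's implementation.
// Msg, Info and PoisonPill here are hypothetical stand-ins for the tutorial's messages.
object PoisonPillSimulation {
  sealed trait Msg
  final case class Info(name: String) extends Msg
  case object PoisonPill extends Msg

  /** Processes messages in FIFO order.
    * Returns (messages handled by the actor, messages that become dead letters). */
  def simulate(mailbox: List[Msg]): (List[Msg], List[Msg]) = {
    // Everything queued before the first PoisonPill is handled normally.
    val (handled, rest) = mailbox.span(_ != PoisonPill)
    // The PoisonPill itself stops the actor; whatever follows cannot be delivered.
    val deadLetters = rest.drop(1)
    (handled, deadLetters)
  }

  def main(args: Array[String]): Unit = {
    val (handled, dead) = simulate(List(Info("vanilla"), PoisonPill, Info("plain")))
    println(s"handled = $handled")
    println(s"dead letters = $dead")
  }
}
```

In the real program, Akka reports the undelivered Info("plain") through its dead letters logging, which is the last line of the output for this tutorial.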

Finally, we close the actor system by calling the terminate() method.

println("\nStep 6: close the actor system")

val isTerminated = system.terminate()

You should see the following output when you run your Scala application in IntelliJ:

[INFO] [07/03/2018 20:29:44.475] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor/BakingActor] prestart

[INFO] [07/03/2018 20:29:44.475] [DonutStoreActorSystem-akka.actor.default-dispatcher-5] [akka://DonutStoreActorSystem/user/DonutInfoActor] prestart

[INFO] [07/03/2018 20:29:44.477] [DonutStoreActorSystem-akka.actor.default-dispatcher-5] [akka://DonutStoreActorSystem/user/DonutInfoActor] Found vanilla donut

[INFO] [07/03/2018 20:29:44.477] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor/BakingActor] BakingActor baking vanilla donut

[INFO] [07/03/2018 20:29:44.477] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutInfoActor/BakingActor] postStop

[INFO] [07/03/2018 20:29:44.477] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutInfoActor] postStop

[INFO] [07/03/2018 20:29:44.477] [DonutStoreActorSystem-akka.actor.default-dispatcher-5] [akka://DonutStoreActorSystem/user/DonutInfoActor] Message [com.nb.actors.Tutorial_10_Kill_Actor_Using_PoisonPill$DonutStoreProtocol$Info] without sender to Actor[akka://DonutStoreActorSystem/user/DonutInfoActor#342625185] was not delivered. [1] dead letters encountered. This logging can be turned off or adjusted with configuration settings 'akka.log-dead-letters' and 'akka.log-dead-letters-during-shutdown'.
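In the output above, BakingActor logs postStop before DonutInfoActor does. This happens because a stopping actor first stops its children and only runs its own postStop once they have terminated. The following is a minimal plain-Scala model of that ordering. The Node type and the stopOrder function are hypothetical and are not Akka APIs.

```scala
// Plain-Scala model of postStop ordering in an actor hierarchy; NOT Akka code.
object StopOrderSimulation {
  // Hypothetical tree node standing in for an actor and its child actors.
  final case class Node(name: String, children: List[Node] = Nil)

  /** Order in which postStop would be logged: every child subtree
    * finishes stopping before its parent's postStop runs. */
  def stopOrder(actor: Node): List[String] =
    actor.children.flatMap(stopOrder) :+ actor.name

  def main(args: Array[String]): Unit = {
    val hierarchy = Node("DonutInfoActor", List(Node("BakingActor")))
    stopOrder(hierarchy).foreach(name => println(s"$name postStop"))
  }
}
```

In Akka, sibling children stop concurrently, so the order among siblings in this sketch is arbitrary. The only point it illustrates is that a child stops before its parent.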

Error Kernel Supervision

This tutorial builds on our previous discussions of Actor Hierarchy, Actor Lifecycle, and stopping actors using Actor PoisonPill. We introduce the Error Kernel Pattern, which keeps failures isolated and localized instead of letting them crash the entire system. You can find additional details on the Error Kernel approach in the official Akka documentation.

As a reminder, Akka Actors form a hierarchy, and we will use that feature to have actors supervise and react to failures of their child actors. We will expand our previous DonutStockActor example and have it forward work to a child actor named DonutStockWorkerActor. Since DonutStockWorkerActor is a child actor of DonutStockActor, it will be supervised by DonutStockActor.

This is the initial step of creating an Actor System named DonutStoreActorSystem.

println("Step 1: Create an actor system")

val system = ActorSystem("DonutStoreActorSystem")

We expand our DonutStoreProtocol and add a CheckStock case class alongside the previous Info case class. We'll use both for message passing with our Actors. We also create a custom exception, WorkerFailedException, which we will use to show how to isolate failures by following the Error Kernel approach.

println("\nStep 2: Define the message passing protocol for our DonutStoreActor")

object DonutStoreProtocol {

case class Info(name: String)

case class CheckStock(name: String)

case class WorkerFailedException(error: String) extends Exception(error)

}

When our DonutStockActor receives messages of type CheckStock, it forwards the request to a child actor of type DonutStockWorkerActor, which does the actual processing. We will define DonutStockWorkerActor in Step 4. Following the Error Kernel approach, DonutStockActor supervises its child actor DonutStockWorkerActor by providing a SupervisorStrategy. In this simple example, our actor reacts to exceptions of type WorkerFailedException by restarting the child actor DonutStockWorkerActor, up to 3 times within 1 second (maxNrOfRetries and withinTimeRange). For all other exceptions, we assume that DonutStockActor is unable to handle them, so it escalates them up the actor hierarchy.

println("\nStep 3: Create DonutStockActor")

class DonutStockActor extends Actor with ActorLogging {

override def supervisorStrategy: SupervisorStrategy =

OneForOneStrategy(maxNrOfRetries = 3, withinTimeRange = 1.second) {

case _: WorkerFailedException =>

log.error("Worker failed exception, will restart.")

Restart

case _: Exception =>

log.error("Worker failed, will need to escalate up the hierarchy")

Escalate

}

val workerActor = context.actorOf(Props[DonutStockWorkerActor], name = "DonutStockWorkerActor")

def receive = {

case checkStock @ CheckStock(name) =>

log.info(s"Checking stock for $name donut")

workerActor forward checkStock

}

}
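The decision logic of this supervisorStrategy can be modelled as a plain function from the exception to a directive. Add a counter that stops the child once more than maxNrOfRetries restarts happen within withinTimeRange, and you have the core of the strategy. The sketch below is a simplified plain-Scala model with hypothetical names (Directive, decide, RetryWindow). It uses a sliding window, whereas Akka's internal bookkeeping differs in detail. In Akka, an exception the decider does not match is escalated, and a child that exceeds its retry limit is stopped rather than restarted.

```scala
// Simplified plain-Scala model of a OneForOneStrategy decider; NOT Akka's implementation.
object SupervisionSketch {
  // Hypothetical mirror of Akka's Restart / Escalate / Stop directives.
  sealed trait Directive
  case object Restart extends Directive
  case object Escalate extends Directive
  case object Stop extends Directive

  final case class WorkerFailedException(error: String) extends Exception(error)

  // Same shape as the pattern match in DonutStockActor's supervisorStrategy.
  def decide(t: Throwable): Directive = t match {
    case _: WorkerFailedException => Restart
    case _                        => Escalate // any other failure goes up the hierarchy
  }

  /** Counts restarts inside a sliding time window (in millis) and
    * stops the child once more than maxNrOfRetries fall inside it. */
  final class RetryWindow(maxNrOfRetries: Int, withinMillis: Long) {
    private var restarts = Vector.empty[Long]

    def onFailure(t: Throwable, nowMillis: Long): Directive = decide(t) match {
      case Restart =>
        // Forget restarts that are older than the window, then record this one.
        restarts = restarts.filter(ts => nowMillis - ts < withinMillis) :+ nowMillis
        if (restarts.size > maxNrOfRetries) Stop else Restart
      case other => other
    }
  }
}
```

With maxNrOfRetries = 3 and a 1000 ms window, four WorkerFailedExceptions at 0, 100, 200 and 300 ms give Restart three times and then Stop. An IllegalStateException is escalated right away.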

We tap into the Actor Lifecycle and override the postRestart() method so that we know when DonutStockWorkerActor is restarted. When DonutStockWorkerActor receives messages of type CheckStock, it delegates the work to its findStock() method. For the sake of this example, after processing the CheckStock message, DonutStockWorkerActor stops itself by calling context.stop(self), so it will not process any further messages.

You can think of the findStock() method as simulating access to an external resource, such as a database, to find the stock quantity for a particular donut. In our example, it simply returns an arbitrary Int value of 100.

println("\nStep 4: Worker Actor called DonutStockWorkerActor")

class DonutStockWorkerActor extends Actor with ActorLogging {

@throws[Exception](classOf[Exception])

override def postRestart(reason: Throwable): Unit = {

log.info(s"restarting ${self.path.name} because of $reason")

}

def receive = {

case CheckStock(name) =>

sender() ! findStock(name) // reply so the Ask in Step 6 completes instead of timing out

context.stop(self)

}

def findStock(name: String): Int = {

log.info(s"Finding stock for donut = $name")

100

// throw new IllegalStateException("boom") // Will Escalate the exception up the hierarchy

// throw new WorkerFailedException("boom") // Will Restart DonutStockWorkerActor

}

}

Create a DonutStockActor similar to our previous tutorials.

println("\nStep 5: Define DonutStockActor")

val donutStockActor = system.actorOf(Props[DonutStockActor], name = "DonutStockActor")

We send a CheckStock message to the DonutStockActor using the Akka Ask Pattern.

println("\nStep 6: Akka Ask Pattern")

import DonutStoreProtocol._

import akka.pattern._

import akka.util.Timeout

import scala.concurrent.Future

import scala.concurrent.ExecutionContext.Implicits.global

import scala.concurrent.duration._

implicit val timeout: Timeout = Timeout(5.seconds)

val vanillaDonutStock: Future[Int] = (donutStockActor ? CheckStock("vanilla")).mapTo[Int]

for {

found <- vanillaDonutStock

} yield (println(s"Vanilla donut stock = $found"))

Thread.sleep(5000)
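The ask (?) returns a Future[Any], and mapTo[Int] casts the eventual reply to Int. If the reply has another type, the future fails with a ClassCastException. scala.concurrent.Future provides this same mapTo method, so you can try the behaviour without an actor system. The goodReply and badReply values below are hypothetical stand-ins for what a worker might send back.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._
import scala.util.Try

object MapToSketch {
  // Hypothetical stand-ins for the Future[Any] returned by `donutStockActor ? CheckStock("vanilla")`.
  val goodReply: Future[Any] = Future.successful(100)
  val badReply: Future[Any] = Future.successful("one hundred")

  // mapTo handles primitive types via their boxed class, so an Int reply matches mapTo[Int].
  val goodStock: Future[Int] = goodReply.mapTo[Int]
  // A reply of the wrong type fails the Future with a ClassCastException.
  val badStock: Future[Int] = badReply.mapTo[Int]

  def main(args: Array[String]): Unit = {
    println(Await.result(goodStock, 1.second)) // 100
    println(Try(Await.result(badStock, 1.second))) // a Failure wrapping a ClassCastException
  }
}
```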

Finally, we call the terminate() method to close our Actor System.

println("\nStep 7: Close the actor system")

val isTerminated = system.terminate()

You can simulate failures by uncommenting either throw new IllegalStateException("boom") or throw new WorkerFailedException("boom") in findStock(). As per our SupervisorStrategy from Step 3, if DonutStockWorkerActor throws an IllegalStateException, the supervisor actor DonutStockActor simply escalates the exception up the actor hierarchy. When you run the program, you should see the following statements showing the escalation:

[INFO] [05/15/2018 20:35:47.163] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [05/15/2018 20:35:47.163] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerActor] Finding stock for donut = vanilla

[ERROR] [05/15/2018 20:35:47.163] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Worker failed, will need to escalate up the hierarchy

[ERROR] [05/15/2018 20:35:47.178] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] boom

For exceptions of type WorkerFailedException, you will notice that the SupervisorStrategy will attempt to restart the child actor DonutStockWorkerActor as shown below:

[INFO] [05/15/2018 20:37:13.503] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [05/15/2018 20:37:13.503] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerActor] Finding stock for donut = vanilla

[ERROR] [05/15/2018 20:37:13.519] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutStockActor] Worker failed exception, will restart.

[ERROR] [05/15/2018 20:37:13.519] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerActor] boom

[INFO] [05/15/2018 20:37:13.519] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerActor] restarting DonutStockWorkerActor because of com.nb.actors.Tutorial_11_ErrorKernel$DonutStoreProtocol$WorkerFailedException: boom

RoundRobinPool

In this tutorial, we introduce Akka Routers. So far, we've seen how to send messages to an Akka Actor. Routing is a handy feature that Akka provides: it allows you to send messages through a router to a predefined set of routees. If you are not familiar with Akka routers, that's okay! We will provide step-by-step examples of the various routers that Akka provides.

We expand our previous example so that, instead of sending messages to a single DonutStockWorkerActor, DonutStockActor sends them to a pool of DonutStockWorkerActors. Akka provides various routing strategies, which determine how messages flow from a router to its routees. In the example below, we use the RoundRobinPool and, as its name implies, messages are sent to the routees in a round-robin fashion.

Akka provides various other routing logics, such as RandomRoutingLogic, ScatterGatherFirstCompletedRoutingLogic, TailChoppingRoutingLogic, SmallestMailboxRoutingLogic, BroadcastRoutingLogic and ConsistentHashingRoutingLogic. You can find additional details on these routing logics in the official Akka Routing documentation.
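At its core, round-robin routing cycles an index over the routees. The plain-Scala sketch below uses a hypothetical RoundRobin class, not Akka's RoundRobinRoutingLogic. It shows which of five routees would receive each of ten messages. The routees are named $a to $e, as in the log output of this tutorial.

```scala
import java.util.concurrent.atomic.AtomicLong

// A plain-Scala model of round-robin selection; NOT Akka's RoundRobinRoutingLogic.
final class RoundRobin[T](routees: IndexedSeq[T]) {
  require(routees.nonEmpty, "a router needs at least one routee")
  // An atomic counter keeps selection safe if several threads route concurrently.
  private val next = new AtomicLong(0L)

  def select(): T = {
    val i = next.getAndIncrement()
    routees((i % routees.size).toInt)
  }
}

object RoundRobinDemo {
  def main(args: Array[String]): Unit = {
    val router = new RoundRobin(Vector("$a", "$b", "$c", "$d", "$e"))
    val assignments = (1 to 10).map(_ => router.select())
    println(assignments.mkString(", ")) // $a, $b, $c, $d, $e, $a, $b, $c, $d, $e
  }
}
```

Each routee receives two of the ten messages. This matches the tutorial's log, where every routee from $a to $e appears twice. The interleaving in the log differs because the routees run concurrently.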

This step should be familiar to you by now, and we create an ActorSystem named DonutStoreActorSystem.

println("Step 1: Create an actor system")

val system = ActorSystem("DonutStoreActorSystem")

We define the message passing protocol for our actors.

println("\nStep 2: Define the message passing protocol for our DonutStoreActor")

object DonutStoreProtocol {

case class Info(name: String)

case class CheckStock(name: String)

case class WorkerFailedException(error: String) extends Exception(error)

}

We create a DonutStockActor, which extends the akka.actor.Actor trait. DonutStockActor will forward the messages it receives to DonutStockWorkerActor, which we'll define in Step 4. However, it will not forward the messages directly to a single DonutStockWorkerActor. In previous examples, we defined DonutStockWorkerActor as a child actor using val workerActor = context.actorOf(Props[DonutStockWorkerActor], name = "DonutStockWorkerActor"). Instead, we now define a pool of worker actors of type DonutStockWorkerActor. As the routing strategy for the pool, we use a RoundRobinPool of 5 routees, made resizable between 5 and 10 routees with a DefaultResizer, as shown below.

println("\nStep 3: Create DonutStockActor")

class DonutStockActor extends Actor with ActorLogging {

override def supervisorStrategy: SupervisorStrategy =

OneForOneStrategy(maxNrOfRetries = 3, withinTimeRange = 5.seconds) {

case _: WorkerFailedException =>

log.error("Worker failed exception, will restart.")

Restart

case _: Exception =>

log.error("Worker failed, will need to escalate up the hierarchy")

Escalate

}

// We will not create one worker actor.

// val workerActor = context.actorOf(Props[DonutStockWorkerActor], name = "DonutStockWorkerActor")

// We are using a resizable RoundRobinPool.

val resizer = DefaultResizer(lowerBound = 5, upperBound = 10)

val props = RoundRobinPool(5, Some(resizer), supervisorStrategy = supervisorStrategy)

.props(Props[DonutStockWorkerActor])

val donutStockWorkerRouterPool: ActorRef = context.actorOf(props, "DonutStockWorkerRouter")

def receive = {

case checkStock @ CheckStock(name) =>

log.info(s"Checking stock for $name donut")

donutStockWorkerRouterPool forward checkStock

}

}

The DonutStockWorkerActor internally carries out the findStock() operation when it receives a message of type CheckStock.

println("\nStep 4: Worker Actor called DonutStockWorkerActor")

class DonutStockWorkerActor extends Actor with ActorLogging {

override def postRestart(reason: Throwable): Unit = {

log.info(s"restarting ${self.path.name} because of $reason")

}

def receive = {

case CheckStock(name) =>

sender ! findStock(name)

}

def findStock(name: String): Int = {

log.info(s"Finding stock for donut = $name, thread = ${Thread.currentThread().getId}")

100

}

}

We use the system.actorOf() method to create a DonutStockActor within our ActorSystem.

println("\nStep 5: Define DonutStockActor")

val donutStockActor = system.actorOf(Props[DonutStockActor], name = "DonutStockActor")

We fire a bunch of requests at DonutStockActor to check the stock for vanilla donuts. Once DonutStockActor receives a CheckStock message, it forwards the message to one of the DonutStockWorkerActor routees in the pool we defined in Step 3. As a reminder, the pool uses the RoundRobinPool strategy.

println("\nStep 6: Use Akka Ask Pattern and send a bunch of requests to DonutStockActor")

import DonutStoreProtocol._

import akka.pattern._

import akka.util.Timeout

import scala.concurrent.Future

import scala.concurrent.ExecutionContext.Implicits.global

import scala.concurrent.duration._

implicit val timeout: Timeout = Timeout(5.seconds)

val vanillaStockRequests = (1 to 10).map(i => (donutStockActor ? CheckStock("vanilla")).mapTo[Int])

for {

results <- Future.sequence(vanillaStockRequests)

} yield println(s"vanilla stock results = $results")

Thread.sleep(5000)
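Future.sequence turns the collection of ask replies, an IndexedSeq[Future[Int]], into a single Future holding all ten results. If any one of the requests fails, the combined Future fails too. Here is a minimal sketch without actors. The hypothetical checkStock function stands in for (donutStockActor ? CheckStock("vanilla")).mapTo[Int].

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object SequenceSketch {
  // Hypothetical stand-in for an ask to DonutStockActor; always finds 100 donuts.
  def checkStock(name: String): Future[Int] = Future(100)

  // Ten independent requests, as in Step 6.
  val requests: IndexedSeq[Future[Int]] = (1 to 10).map(_ => checkStock("vanilla"))

  // One Future that completes when all ten have completed, or fails if any of them fails.
  val allResults: Future[IndexedSeq[Int]] = Future.sequence(requests)

  def main(args: Array[String]): Unit =
    println(s"vanilla stock results = ${Await.result(allResults, 5.seconds)}")
}
```

Awaiting the result, as main does, is convenient in a demo. The tutorial's for-comprehension keeps the caller non-blocking instead.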

Finally, we close our actor system using the usual system.terminate() method.

val isTerminated = system.terminate()

You should see the following output when you run your Scala application in IntelliJ:

[INFO] [07/06/2018 20:26:35.785] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.787] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-5] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$a] Finding stock for donut = vanilla, thread = 17

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-6] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$b] Finding stock for donut = vanilla, thread = 18

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-7] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$c] Finding stock for donut = vanilla, thread = 19

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-6] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$d] Finding stock for donut = vanilla, thread = 18

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-7] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$e] Finding stock for donut = vanilla, thread = 13

[INFO] [07/06/2018 20:26:35.788] [DonutStoreActorSystem-akka.actor.default-dispatcher-7] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.789] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$b] Finding stock for donut = vanilla, thread = 13

[INFO] [07/06/2018 20:26:35.789] [DonutStoreActorSystem-akka.actor.default-dispatcher-7] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.789] [DonutStoreActorSystem-akka.actor.default-dispatcher-6] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$c] Finding stock for donut = vanilla, thread = 18

[INFO] [07/06/2018 20:26:35.789] [DonutStoreActorSystem-akka.actor.default-dispatcher-7] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:26:35.789] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$d] Finding stock for donut = vanilla, thread = 13

[INFO] [07/06/2018 20:26:35.789] [DonutStoreActorSystem-akka.actor.default-dispatcher-5] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$a] Finding stock for donut = vanilla, thread = 17

[INFO] [07/06/2018 20:26:35.789] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$e] Finding stock for donut = vanilla, thread = 13

[INFO] [07/06/2018 20:26:35.789] [DonutStoreActorSystem-akka.actor.default-dispatcher-7] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

vanilla stock results = Vector(100, 100, 100, 100, 100, 100, 100, 100, 100, 100)

ScatterGatherFirstCompletedPool

This tutorial continues our previous discussion of the Akka RoundRobinPool router and introduces another routing strategy that Akka provides: ScatterGatherFirstCompletedPool. As the name implies, each message is scattered to all of the routees, and only the first completed reply is accepted. In a real-life scenario, you might fan out a request from an application to a farm of homogeneous services. As a reminder, you can find more details in the official Akka documentation.
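The scatter-gather idea maps onto Future.firstCompletedOf from the Scala standard library: send the same request to every routee and keep whichever reply arrives first. The minimal sketch below assumes hypothetical routees modelled as functions that return a Future. One routee never answers (Future.never, available since Scala 2.12) and another answers immediately, which keeps the result deterministic.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

// Plain-Scala model of scatter-gather-first-completed; NOT Akka's router.
object ScatterGatherSketch {
  // Hypothetical routee: given a donut name, eventually replies with a stock count.
  type Routee = String => Future[Int]

  /** Sends the request to every routee and completes with the first reply. */
  def scatterGather(routees: Seq[Routee], name: String): Future[Int] =
    Future.firstCompletedOf(routees.map(routee => routee(name)))

  val slowRoutee: Routee = _ => Future.never // never replies
  val fastRoutee: Routee = _ => Future.successful(100)

  def main(args: Array[String]): Unit = {
    val first = scatterGather(Seq(slowRoutee, fastRoutee, slowRoutee), "vanilla")
    println(Await.result(first, 5.seconds))
  }
}
```

Unlike this sketch, the Akka router also takes a within duration. If no routee replies within it, the sender receives a failure instead of waiting forever.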

We start by creating our ActorSystem named DonutStoreActorSystem.

println("Step 1: Create an actor system")

val system = ActorSystem("DonutStoreActorSystem")

We define the message passing protocols to our Actors by creating case classes Info and CheckStock.

println("\nStep 2: Define the message passing protocol for our DonutStoreActor")

object DonutStoreProtocol {

case class Info(name: String)

case class CheckStock(name: String)

case class WorkerFailedException(error: String) extends Exception(error)

}

Similar to our previous examples, we create a DonutStockActor, which extends the akka.actor.Actor trait. Instead of forwarding messages to a single DonutStockWorkerActor, DonutStockActor uses a ScatterGatherFirstCompletedPool to fan out each request to a pool of DonutStockWorkerActors. It only accepts the reply from the first DonutStockWorkerActor to complete the operation. The within parameter bounds how long the router waits for that first reply.

println("\nStep 3: Create DonutStockActor")

class DonutStockActor extends Actor with ActorLogging {

override def supervisorStrategy: SupervisorStrategy =

OneForOneStrategy(maxNrOfRetries = 3, withinTimeRange = 5.seconds) {

case _: WorkerFailedException =>

log.error("Worker failed exception, will restart.")

Restart

case _: Exception =>

log.error("Worker failed, will need to escalate up the hierarchy")

Escalate

}

// We will not create a single worker actor.

// val workerActor = context.actorOf(Props[DonutStockWorkerActor], name = "DonutStockWorkerActor")

// We are using a resizable ScatterGatherFirstCompletedPool.

val workerName = "DonutStockWorkerActor"

val resizer = DefaultResizer(lowerBound = 5, upperBound = 10)

val props = ScatterGatherFirstCompletedPool(

nrOfInstances = 5,

resizer = Some(resizer),

supervisorStrategy = supervisorStrategy,

within = 5.seconds

).props(Props[DonutStockWorkerActor])

val donutStockWorkerRouterPool: ActorRef = context.actorOf(props, "DonutStockWorkerRouter")

def receive = {

case checkStock @ CheckStock(name) =>

log.info(s"Checking stock for $name donut")

// We forward any work to be carried by worker actors within the pool

donutStockWorkerRouterPool forward checkStock

}

}

Our DonutStockWorkerActor is unchanged from our previous examples, as it simply runs the findStock() operation for messages of type CheckStock.

println("\nStep 4: Worker Actor called DonutStockWorkerActor")

class DonutStockWorkerActor extends Actor with ActorLogging {

override def postRestart(reason: Throwable): Unit = {

log.info(s"restarting ${self.path.name} because of $reason")

}

def receive = {

case CheckStock(name) =>

sender ! findStock(name)

}

def findStock(name: String): Int = {

log.info(s"Finding stock for donut = $name, thread = ${Thread.currentThread().getId}")

100

}

}

We create a DonutStockActor within our ActorSystem by calling the system.actorOf() method.

println("\nStep 5: Define DonutStockActor")

val donutStockActor = system.actorOf(Props[DonutStockActor], name = "DonutStockActor")

We fire a bunch of CheckStock messages at DonutStockActor. Since DonutStockActor uses the ScatterGatherFirstCompletedPool from Step 3, it fans out each request to all of its DonutStockWorkerActor routees but only accepts the first completed reply.

println("\nStep 6: Use Akka Ask Pattern and send a bunch of requests to DonutStockActor")

import DonutStoreProtocol._

import akka.pattern._

import akka.util.Timeout

import scala.concurrent.Future

import scala.concurrent.ExecutionContext.Implicits.global

import scala.concurrent.duration._

implicit val timeout: Timeout = Timeout(5.seconds)

val vanillaStockRequests = (1 to 2).map(i => (donutStockActor ? CheckStock("vanilla")).mapTo[Int])

for {

results <- Future.sequence(vanillaStockRequests)

} yield (println(s"vanilla stock results = $results"))

Thread.sleep(5000)

Finally, we close our actor system by calling the terminate() method.

println("\nStep 7: Close the actor system")

val isTerminated = system.terminate()

You will notice that each "Checking stock for vanilla donut" request results in 5 "Finding stock for donut ..." log lines, one per routee. This is because the pool was created with nrOfInstances = 5, which is also the resizer's lowerBound. Only the first of these replies to complete is returned to the caller.

[INFO] [07/06/2018 20:08:11.863] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:08:11.868] [DonutStoreActorSystem-akka.actor.default-dispatcher-5] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$a] Finding stock for donut = vanilla, thread = 17

[INFO] [07/06/2018 20:08:11.868] [DonutStoreActorSystem-akka.actor.default-dispatcher-7] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$b] Finding stock for donut = vanilla, thread = 19

[INFO] [07/06/2018 20:08:11.868] [DonutStoreActorSystem-akka.actor.default-dispatcher-9] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$c] Finding stock for donut = vanilla, thread = 21

[INFO] [07/06/2018 20:08:11.868] [DonutStoreActorSystem-akka.actor.default-dispatcher-6] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$d] Finding stock for donut = vanilla, thread = 18

[INFO] [07/06/2018 20:08:11.868] [DonutStoreActorSystem-akka.actor.default-dispatcher-9] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$e] Finding stock for donut = vanilla, thread = 21

[INFO] [07/06/2018 20:08:11.871] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:08:11.871] [DonutStoreActorSystem-akka.actor.default-dispatcher-9] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$a] Finding stock for donut = vanilla, thread = 21

[INFO] [07/06/2018 20:08:11.871] [DonutStoreActorSystem-akka.actor.default-dispatcher-6] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$b] Finding stock for donut = vanilla, thread = 18

[INFO] [07/06/2018 20:08:11.872] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$c] Finding stock for donut = vanilla, thread = 14

[INFO] [07/06/2018 20:08:11.872] [DonutStoreActorSystem-akka.actor.default-dispatcher-5] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$d] Finding stock for donut = vanilla, thread = 17

[INFO] [07/06/2018 20:08:11.872] [DonutStoreActorSystem-akka.actor.default-dispatcher-6] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$e] Finding stock for donut = vanilla, thread = 18

vanilla stock results = Vector(100, 100)

TailChoppingPool

We continue with our Akka Routers tutorials and introduce the TailChoppingPool router. This router is fairly similar to the previous ScatterGatherFirstCompletedPool router: it fans out requests to its routees and only accepts the first completed reply. However, it does not send the message to all routees at once. The TailChoppingPool first sends it to one randomly picked routee. Then, after each interval, it sends it to one more routee, until a reply arrives or the within duration expires.

You can review the official Akka documentation for additional details on the TailChoppingPool router.
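The tail-chopping schedule can be worked through in virtual time without any actors. Routee k is contacted at k * interval, but only while no reply has arrived yet and within has not expired. The first reply wins. The sketch below is a hypothetical model, not Akka's TailChoppingRoutingLogic. It assumes the routees are already listed in the random order the router picked, and the latency values are made up for illustration.

```scala
// Virtual-time model of tail-chopping; NOT Akka's TailChoppingRoutingLogic.
object TailChoppingSketch {
  /** Which routee won, when its reply arrived, and how many routees were contacted. */
  final case class Outcome(winner: Int, replyAtMillis: Long, requestsSent: Int)

  /** latencies(k) is how long routee k takes to reply (hypothetical values).
    * Returns None if no reply arrives within `withinMillis`. */
  def tailChop(latencies: IndexedSeq[Long], intervalMillis: Long, withinMillis: Long): Option[Outcome] = {
    var best: Option[Outcome] = None
    var sent = 0
    // Keep contacting routees while no reply has arrived yet and `within` has not expired.
    def stillWaiting(sendAt: Long): Boolean =
      sendAt < withinMillis && best.forall(b => b.replyAtMillis > sendAt)
    while (sent < latencies.size && stillWaiting(sent * intervalMillis)) {
      val replyAt = sent * intervalMillis + latencies(sent)
      if (best.forall(b => replyAt < b.replyAtMillis)) best = Some(Outcome(sent, replyAt, 0))
      sent += 1
    }
    // A first reply arriving after `within` counts as a timeout.
    best.filter(_.replyAtMillis <= withinMillis).map(_.copy(requestsSent = sent))
  }

  def main(args: Array[String]): Unit =
    println(tailChop(Vector(35L, 12L, 50L, 5L, 5L), intervalMillis = 10L, withinMillis = 5000L))
}
```

With latencies of 35, 12 and 50 ms for the first three routees and a 10 ms interval, three routees are contacted. The second one wins with a reply at 22 ms, so the remaining two are never asked. A fast first routee means only one request is sent. This is what makes tail-chopping cheaper than scatter-gather, at the cost of some extra latency.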

Our first step is to create the DonutStoreActorSystem.

println("Step 1: Create an actor system")

val system = ActorSystem("DonutStoreActorSystem")

Next, we define the message passing protocols for our Actors.

println("\nStep 2: Define the message passing protocol for our DonutStoreActor")

object DonutStoreProtocol {

case class Info(name: String)

case class CheckStock(name: String)

case class WorkerFailedException(error: String) extends Exception(error)

}

We then create the DonutStockActor, which internally defines a TailChoppingPool router. On receipt of CheckStock messages, DonutStockActor forwards the request to a TailChoppingPool of DonutStockWorkerActors. This means that each CheckStock message is fanned out to DonutStockWorkerActors incrementally, one additional routee every interval (10 milliseconds in our configuration). As a reminder, only the first reply is accepted, and all others are discarded.

println("\nStep 3: Create DonutStockActor")

class DonutStockActor extends Actor with ActorLogging {

override def supervisorStrategy: SupervisorStrategy =

OneForOneStrategy(maxNrOfRetries = 3, withinTimeRange = 5.seconds) {

case _: WorkerFailedException =>

log.error("Worker failed exception, will restart.")

Restart

case _: Exception =>

log.error("Worker failed, will need to escalate up the hierarchy")

Escalate

}

// We will not create a single worker actor.

// val workerActor = context.actorOf(Props[DonutStockWorkerActor], name = "DonutStockWorkerActor")

// We are using a resizable TailChoppingPool.

val workerName = "DonutStockWorkerActor"

val resizer = DefaultResizer(lowerBound = 5, upperBound = 10)

val props = TailChoppingPool(

nrOfInstances = 5,

resizer = Some(resizer),

within = 5.seconds,

interval = 10.millis,

supervisorStrategy = supervisorStrategy

).props(Props[DonutStockWorkerActor])

val donutStockWorkerRouterPool: ActorRef = context.actorOf(props, "DonutStockWorkerRouter")

def receive = {

case checkStock @ CheckStock(name) =>

log.info(s"Checking stock for $name donut")

donutStockWorkerRouterPool forward checkStock

}

}

Similar to our previous router examples, DonutStockWorkerActor calls the findStock() operation when it receives a CheckStock message.

println("\nStep 4: Worker Actor called DonutStockWorkerActor")

class DonutStockWorkerActor extends Actor with ActorLogging {

override def postRestart(reason: Throwable): Unit = {

log.info(s"restarting ${self.path.name} because of $reason")

}

def receive = {

case CheckStock(name) =>

sender ! findStock(name)

}

def findStock(name: String): Int = {

log.info(s"Finding stock for donut = $name, thread = ${Thread.currentThread().getId}")

100

}

}

By calling the system.actorOf() method, we create a DonutStockActor.

println("\nStep 5: Define DonutStockActor")

val donutStockActor = system.actorOf(Props[DonutStockActor], name = "DonutStockActor")

We fire a bunch of CheckStock messages at DonutStockActor. Its TailChoppingPool router incrementally fans out each request to additional DonutStockWorkerActors, as defined by the configuration in Step 3.

println("\nStep 6: Use Akka Ask Pattern and send a bunch of requests to DonutStockActor")

import DonutStoreProtocol._

import akka.pattern._

import akka.util.Timeout

import scala.concurrent.Future

import scala.concurrent.ExecutionContext.Implicits.global

import scala.concurrent.duration._

implicit val timeout: Timeout = Timeout(5.seconds)

val vanillaStockRequests = (1 to 2).map(i => (donutStockActor ? CheckStock("vanilla")).mapTo[Int])

for {

results <- Future.sequence(vanillaStockRequests)

} yield println(s"vanilla stock results = $results")

Thread.sleep(5000)

You should see the following output when you run your Scala application in IntelliJ:

[INFO] [07/06/2018 20:39:18.151] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:39:18.160] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$e] Finding stock for donut = vanilla, thread = 15

[INFO] [07/06/2018 20:39:18.161] [DonutStoreActorSystem-akka.actor.default-dispatcher-2] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$d] Finding stock for donut = vanilla, thread = 13

[INFO] [07/06/2018 20:39:18.162] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:39:18.162] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$d] Finding stock for donut = vanilla, thread = 14

vanilla stock results = Vector(100, 100)

BroadcastPool

In our previous Akka Routers tutorials, we've shown the RoundRobinPool, the ScatterGatherFirstCompletedPool, and the TailChoppingPool. We continue our Akka Routers series by introducing the BroadcastPool router. As its name implies, this router broadcasts, or fans out, each message to all of its routees. In a real-life scenario, the BroadcastPool router can be very handy if you are implementing a gossip protocol, where messages are spread throughout a network. You can find additional information on the Akka BroadcastPool router in the official Akka documentation.

By now, you should be familiar with our first step, where we create the ActorSystem named DonutStoreActorSystem.

println("Step 1: Create an actor system")

val system = ActorSystem("DonutStoreActorSystem")

For Step 2, we define the message passing protocols for our Actors.

println("\nStep 2: Define the message passing protocol for our DonutStoreActor")

object DonutStoreProtocol {

case class Info(name: String)

case class CheckStock(name: String)

case class WorkerFailedException(error: String) extends Exception(error)

}

We create the DonutStockActor by extending the usual akka.actor.Actor trait. When DonutStockActor receives a message of type CheckStock, it uses a BroadcastPool router to fan out the request to every DonutStockWorkerActor in the pool.

println("\nStep 3: Create DonutStockActor")

class DonutStockActor extends Actor with ActorLogging {

import scala.concurrent.duration._

implicit val timeout = Timeout(5 second)

override def supervisorStrategy: SupervisorStrategy =

OneForOneStrategy(maxNrOfRetries = 3, withinTimeRange = 5 seconds) {

case _: WorkerFailedException =>

log.error("Worker failed exception, will restart.")

Restart

case _: Exception =>

log.error("Worker failed, will need to escalate up the hierarchy")

Escalate

}

// We will not create a single worker actor.

// val workerActor = context.actorOf(Props[DonutStockWorkerActor], name = "DonutStockWorkerActor")

// We are using a BroadcastPool with a fixed number of routees: resizer = None below, so the DefaultResizer defined here is not used.

val workerName = "DonutStockWorkerActor"

val resizer = DefaultResizer(lowerBound = 5, upperBound = 10)

val props = BroadcastPool(

nrOfInstances = 5,

resizer = None,

supervisorStrategy = supervisorStrategy

).props(Props[DonutStockWorkerActor])

val donutStockWorkerRouterPool: ActorRef = context.actorOf(props, "DonutStockWorkerRouter")

def receive = {

case checkStock @ CheckStock(name) =>

log.info(s"Checking stock for $name donut")

donutStockWorkerRouterPool ! checkStock

}

}

DonutStockWorkerActor simply calls the findStock() operation when it receives a CheckStock message.

println("\nStep 4: Worker Actor called DonutStockWorkerActor")

class DonutStockWorkerActor extends Actor with ActorLogging {

override def postRestart(reason: Throwable): Unit = {

log.info(s"restarting ${self.path.name} because of $reason")

}

def receive = {

case CheckStock(name) =>

sender ! findStock(name)

}

def findStock(name: String): Int = {

log.info(s"Finding stock for donut = $name, thread = ${Thread.currentThread().getId}")

100

}

}

We create a DonutStockActor in our ActorSystem from Step 1 by calling the system.actorOf() method.

println("\nStep 5: Define DonutStockActor")

val donutStockActor = system.actorOf(Props[DonutStockActor], name = "DonutStockActor")

We use the Akka Tell Pattern to fire a single CheckStock request to DonutStockActor. Note, however, that since DonutStockActor uses a BroadcastPool router with nrOfInstances set to 5 in Step 3, we expect this single request to be fanned out as 5 messages, one to each DonutStockWorkerActor.
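If you want to check that fan-out in isolation, the sketch below sends one tell to a BroadcastPool of 5 routees and waits on a java.util.concurrent.CountDownLatch that each routee counts down. It assumes classic Akka actors (2.5 or 2.6); CountingStockWorker and BroadcastFanOutSketch are hypothetical stand-ins for our DonutStockWorkerActor and application, and the 3-second wait is an arbitrary choice.

```scala
import java.util.concurrent.{CountDownLatch, TimeUnit}
import akka.actor.{Actor, ActorSystem, Props}
import akka.routing.BroadcastPool

// Hypothetical stand-in for DonutStockWorkerActor: it only records that a message arrived.
class CountingStockWorker(delivered: CountDownLatch) extends Actor {
  def receive: Receive = {
    case _: String => delivered.countDown()
  }
}

object BroadcastFanOutSketch {
  // Sends ONE message to a BroadcastPool and reports whether every routee received it.
  def allRouteesReached(nrOfInstances: Int = 5): Boolean = {
    val system = ActorSystem("BroadcastFanOutSketch")
    val delivered = new CountDownLatch(nrOfInstances)
    val router = system.actorOf(
      BroadcastPool(nrOfInstances).props(Props(new CountingStockWorker(delivered))),
      "DonutStockWorkerRouter")
    router ! "vanilla" // a single tell from outside any actor
    try delivered.await(3, TimeUnit.SECONDS) // true only once all routees have counted down
    finally system.terminate()
  }
}
```

A result of true means all 5 routees received the single message, which matches the five "Finding stock" log lines in the output below.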

println("\nStep 6: Use Akka Tell Pattern and send a single request to DonutStockActor")

import DonutStoreProtocol._

donutStockActor ! CheckStock("vanilla")

Thread.sleep(5000)

Finally, we close our ActorSystem by calling the terminate() method.

println("\nStep 7: Close the actor system")

val isTerminated = system.terminate()

You should see the following output when you run your Scala application in IntelliJ:

[INFO] [07/06/2018 20:06:42.886] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor] Checking stock for vanilla donut

[INFO] [07/06/2018 20:06:42.891] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$b] Finding stock for donut = vanilla, thread = 14

[INFO] [07/06/2018 20:06:42.891] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$c] Finding stock for donut = vanilla, thread = 15

[INFO] [07/06/2018 20:06:42.891] [DonutStoreActorSystem-akka.actor.default-dispatcher-3] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$d] Finding stock for donut = vanilla, thread = 14

[INFO] [07/06/2018 20:06:42.891] [DonutStoreActorSystem-akka.actor.default-dispatcher-5] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$a] Finding stock for donut = vanilla, thread = 17

[INFO] [07/06/2018 20:06:42.892] [DonutStoreActorSystem-akka.actor.default-dispatcher-4] [akka://DonutStoreActorSystem/user/DonutStockActor/DonutStockWorkerRouter/$e] Finding stock for donut = vanilla, thread = 15

Akka Default Dispatcher

In this section, we'll go over Akka dispatchers. By now, you should be familiar with the fact that an ActorSystem is designed from the ground up to be asynchronous and non-blocking, and that we've interfaced with our actor systems using the Tell Pattern or the Ask Pattern.

At the heart of the actor system is Akka's dispatcher which, to quote the official Akka documentation, is what makes Akka Actors “tick”. The dispatcher, though, requires an executor to provide it with the threads it needs to run operations in a non-blocking fashion. By default, if you do not specify a particular executor, Akka will use the fork-join-executor, which, as per the official documentation, provides excellent performance in most cases.
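For contrast, this is roughly what specifying a particular executor looks like. It is a minimal sketch, assuming classic Akka 2.5 or later: the dispatcher id donut-fixed-dispatcher, the pool size of 4, the throughput of 1 and the CustomDispatcherSketch object are all hypothetical, and the settings are parsed inline here instead of living in application.conf.

```scala
import akka.actor.ActorSystem
import com.typesafe.config.{Config, ConfigFactory}

object CustomDispatcherSketch {
  // Hypothetical dispatcher backed by a thread-pool-executor with a fixed pool of 4 threads.
  val customConfig: Config = ConfigFactory.parseString(
    """
      |donut-fixed-dispatcher {
      |  type = Dispatcher
      |  executor = "thread-pool-executor"
      |  thread-pool-executor {
      |    fixed-pool-size = 4
      |  }
      |  throughput = 1
      |}
      |""".stripMargin)

  def isRegistered(): Boolean = {
    // Our settings take precedence; everything else still comes from application.conf / reference.conf.
    val system = ActorSystem("CustomDispatcherSketch", customConfig.withFallback(ConfigFactory.load()))
    try system.dispatchers.hasDispatcher("donut-fixed-dispatcher")
    finally system.terminate()
  }
}
```

An actor would then opt into that dispatcher with Props[DonutStockWorkerActor].withDispatcher("donut-fixed-dispatcher"); actors created without withDispatcher keep using the default dispatcher.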

Thread tuning is, however, an important part of any actor system, and as we will see later on in this section, Akka provides you with all the required hooks to tune your dispatcher, executor and threading constructs to meet your particular use cases. For now, we'll get familiar with Akka's default dispatcher, and how you can peek into its configuration. We start off by creating our usual Actor System:

println("Step 1: Create an actor system")

val system = ActorSystem("DonutStoreActorSystem")

To peek into Akka's default dispatcher configuration, you need to access the akka.actor.default-dispatcher config.

println("\nStep 2: Akka default dispatcher config")

val defaultDispatcherConfig = system.settings.config.getConfig("akka.actor.default-dispatcher")

println(s"akka.actor.default-dispatcher = $defaultDispatcherConfig")
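The fork-join-executor default mentioned earlier is visible in this config, although indirectly: executor is set to "default-executor", which falls back to the executor named in default-executor.fallback. The sketch below reads those keys without starting an ActorSystem, assuming the akka-actor jar (and therefore its reference.conf) is on the classpath and that no application.conf overrides them; DefaultExecutorPeek is a hypothetical name.

```scala
import com.typesafe.config.{Config, ConfigFactory}

object DefaultExecutorPeek {
  // ConfigFactory.load() merges application.conf (if any) with every reference.conf on the
  // classpath, including the one shipped in the akka-actor jar, so no ActorSystem is needed.
  val dispatcherConfig: Config = ConfigFactory.load().getConfig("akka.actor.default-dispatcher")

  // "default-executor" uses the ExecutionContext passed to ActorSystem(...), if any;
  // otherwise it falls back to the executor named in default-executor.fallback.
  val executor: String = dispatcherConfig.getString("executor")
  val fallback: String = dispatcherConfig.getString("default-executor.fallback")
  val throughput: Int = dispatcherConfig.getInt("throughput")

  def main(args: Array[String]): Unit =
    println(s"executor = $executor, fallback = $fallback, throughput = $throughput")
}
```

With Akka's defaults these values should match the config dump in the output below: "default-executor", "fork-join-executor" and 5.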

The default dispatcher holds various parameters, and below we show a snapshot of some of the most common ones, namely type, throughput, fork-join-executor.parallelism-min and fork-join-executor.parallelism-max.

println("\nStep 3: Akka default dispatcher type")

val dispatcherType = defaultDispatcherConfig.getString("type")

println(s"$dispatcherType")

println("\nStep 4: Akka default dispatcher throughput")

val dispatcherThroughput = defaultDispatcherConfig.getInt("throughput")

println(s"$dispatcherThroughput")

println("\nStep 5: Akka default dispatcher minimum parallelism")

val dispatcherParallelismMin = defaultDispatcherConfig.getInt("fork-join-executor.parallelism-min")

println(s"$dispatcherParallelismMin")

println("\nStep 6: Akka default dispatcher maximum parallelism")

val dispatcherParallelismMax = defaultDispatcherConfig.getInt("fork-join-executor.parallelism-max")

println(s"$dispatcherParallelismMax")

Finally, we close our actor system by calling the usual system.terminate().

println("\nStep 7: Close the actor system")

val isTerminated = system.terminate()

You should see the following output when you run your Scala application in IntelliJ:

Step 1: Create an actor system

Step 2: Akka default dispatcher config

akka.actor.default-dispatcher = Config(SimpleConfigObject({"affinity-pool-executor":{"fair-work-distribution":{"threshold":128},"idle-cpu-level":5,"parallelism-factor":0.8,"parallelism-max":64,"parallelism-min":4,"queue-selector":"akka.dispatch.affinity.FairDistributionHashCache","rejection-handler":"akka.dispatch.affinity.ThrowOnOverflowRejectionHandler","task-queue-size":512},"attempt-teamwork":"on","default-executor":{"fallback":"fork-join-executor"},"executor":"default-executor","fork-join-executor":{"parallelism-factor":3,"parallelism-max":64,"parallelism-min":8,"task-peeking-mode":"FIFO"},"mailbox-requirement":"","shutdown-timeout":"1s","thread-pool-executor":{"allow-core-timeout":"on","core-pool-size-factor":3,"core-pool-size-max":64,"core-pool-size-min":8,"fixed-pool-size":"off","keep-alive-time":"60s","max-pool-size-factor":3,"max-pool-size-max":64,"max-pool-size-min":8,"task-queue-size":-1,"task-queue-type":"linked"},"throughput":5,"throughput-deadline-time":"0ms","type":"Dispatcher"}))

Step 3: Akka default dispatcher type

Dispatcher

Step 4: Akka default dispatcher throughput

5

Step 5: Akka default dispatcher minimum parallelism

8

Step 6: Akka default dispatcher maximum parallelism

64

Step 7: Close the actor system

NOTE:

- Depending on your operating system, hardware configuration and Akka version, you may see different results for some of the default parameters of Akka's default executor.
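Much of the hardware dependence comes from how the fork-join-executor sizes its thread pool. As we understand Akka's sizing logic, the number of available processors is multiplied by parallelism-factor, rounded up, and then clamped between parallelism-min and parallelism-max. The sketch below reproduces that arithmetic in plain Scala; it is an illustration, not Akka's own code, and ParallelismSketch is a hypothetical name.

```scala
object ParallelismSketch {
  // Scale the core count by parallelism-factor, round up, then clamp into [parallelism-min, parallelism-max].
  def effectiveParallelism(cores: Int, factor: Double, min: Int, max: Int): Int =
    math.min(math.max(math.ceil(cores * factor).toInt, min), max)

  // factor = 3, min = 8, max = 64 are the fork-join-executor values in the config dump above.
  def onThisMachine(): Int =
    effectiveParallelism(Runtime.getRuntime.availableProcessors, factor = 3.0, min = 8, max = 64)

  def main(args: Array[String]): Unit = {
    println(effectiveParallelism(cores = 2, factor = 3.0, min = 8, max = 64))  // 8  (6 is raised to the minimum)
    println(effectiveParallelism(cores = 4, factor = 3.0, min = 8, max = 64))  // 12
    println(effectiveParallelism(cores = 32, factor = 3.0, min = 8, max = 64)) // 64 (96 is capped at the maximum)
    println(s"this machine: ${onThisMachine()}")
  }
}
```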

Akka Lookup Dispatcher